Faith Singer-Villalobos

Matters of the Heart

Using computational models and tools to diagnose heart conditions

Your heart beats 100,000 times a day, 35 million times a year. During your lifetime, it will beat more than three billion times.

“The heart is amazing if you think about it — it’s a model of evolutionary design,” said Rajat Mittal, a professor of Mechanical Engineering at Johns Hopkins University. “From a functional viewpoint, the heart is primarily a pump. But imagine a pump that functions for 80 to 90 years without breaking down and can adapt, minute to minute and year to year, to variations in operating conditions.”

For most people, the heart will beat again and again without any malfunction at all. But what happens when it fails? What tools do physicians have to identify problems and fix them before they cause illness and death? And how are those tools developed and tested?

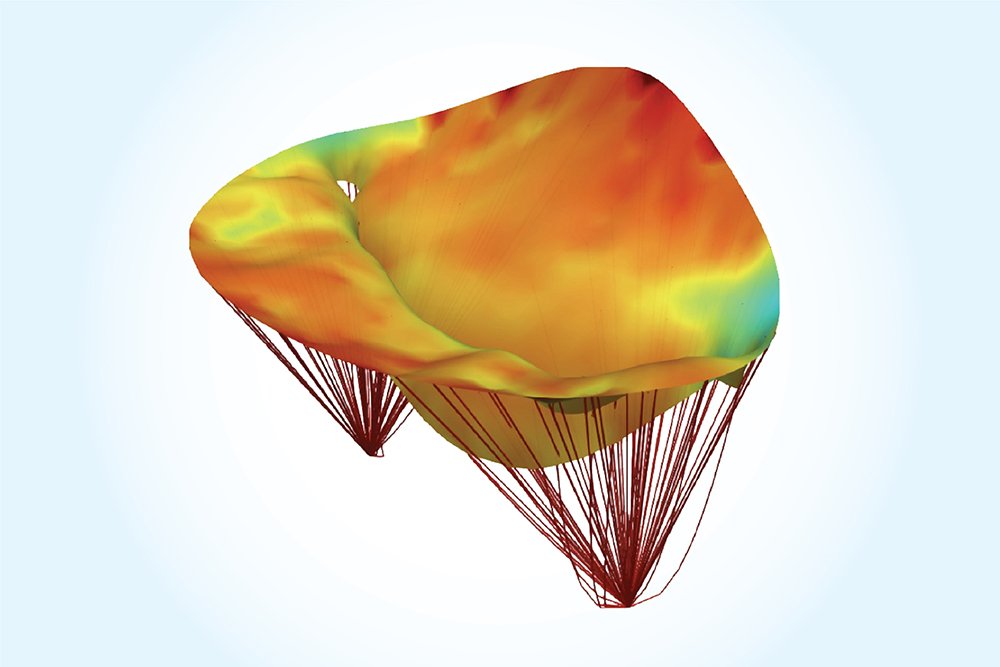

Using sophisticated software, massive datasets, and advanced computing, scientists and engineers are building computational models that unravel complex medical questions and help diagnose and treat heart attacks and strokes. Over the last several years, in work supported by multiple grants from the National Science Foundation (NSF), Mittal has been studying fundamental aspects of how the heart functions, with the goal of developing better treatment strategies.

He started by asking fundamental questions about the morphology, or form, of the heart, such as: What does the engineering of the heart tell us about how the organ functions? Why do valves function the way they do? And what effect does the contraction and expansion of the heart have on the flow and pumping efficiency of blood?

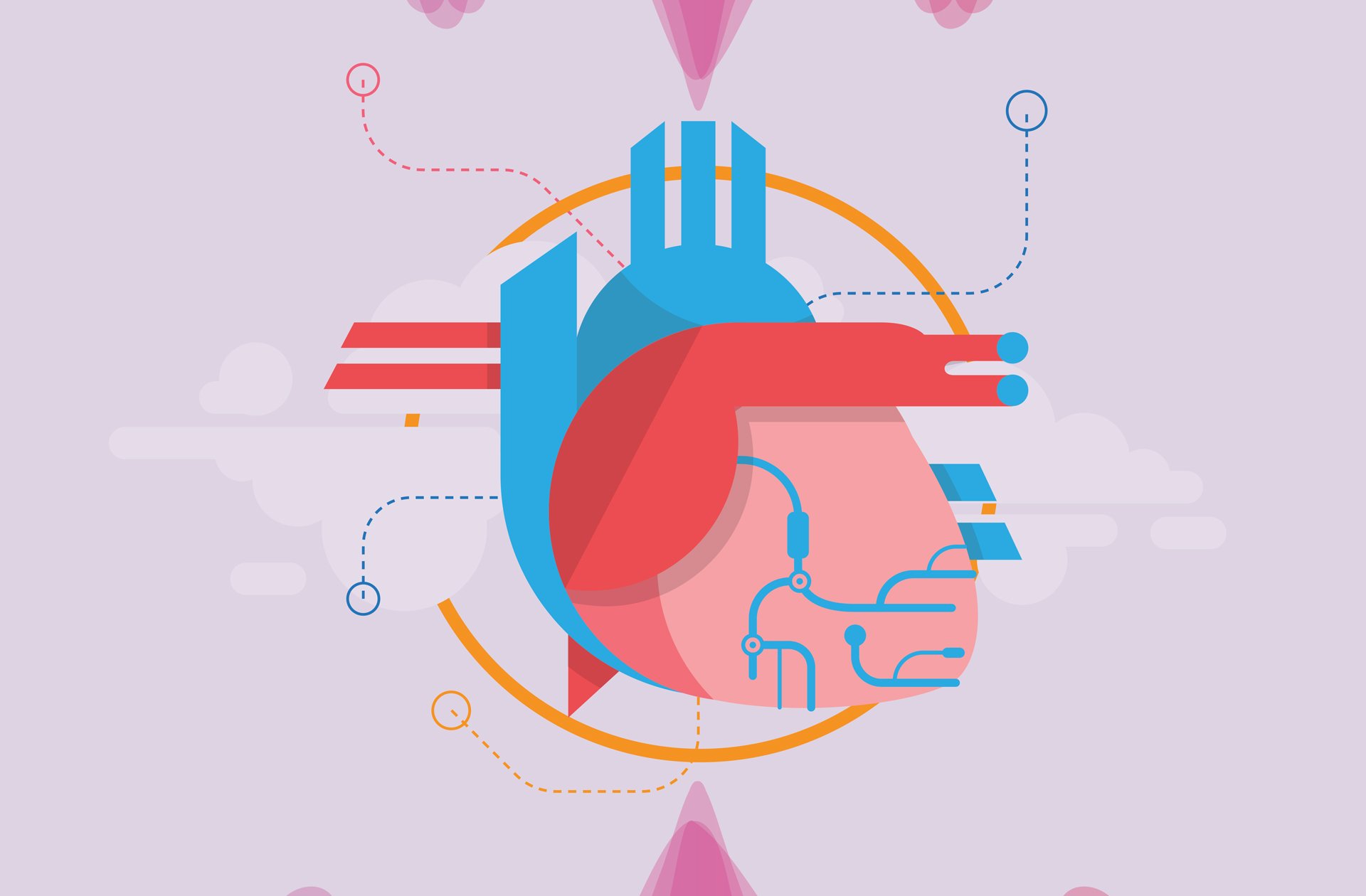

High-performance computing is an essential tool in Mittal’s arsenal for analyzing the workings of the heart with precision. Using supercomputers at TACC, his team has made progress in understanding the heart and, in the process, uncovered new knowledge about why it falters. For instance, contrary to popular opinion, pumping efficiency might not be the biggest driver of heart health.

“What we’re finding is that flow stagnation is a very important driver in the way the heart functions,” Mittal said. “Flow stasis in the human cardiovascular system is disastrous because it causes clots. Once clots form they can block arteries and lead to strokes or heart attacks. The elimination of blood clots is an essential function of the cardiovascular system.”

His team proposed a new metric, validated by clinical data, that can predict the risk of clots based on flow stasis. Mittal has applied for several patents to commercialize technologies associated with these cardiac diagnostic tools.
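
The article does not define the team's metric, so the sketch below uses a hypothetical stand-in to show the general idea of quantifying stasis: track parcels of blood through a simulated flow, record how long each one stays in the chamber (its residence time), and report the share that lingers past a threshold. The function name `stasis_fraction`, the 5-second threshold, and the sample numbers are all assumptions for illustration, not Mittal's clinically validated metric.

```python
def stasis_fraction(residence_times_s, threshold_s):
    """Fraction of tracked blood parcels whose residence time exceeds threshold_s.

    Hypothetical stasis indicator for illustration only; it is NOT the
    clinically validated metric described in the article.
    """
    if not residence_times_s:
        raise ValueError("need at least one residence time")
    if threshold_s <= 0:
        raise ValueError("threshold must be positive")
    # Count parcels that stay in the chamber longer than the threshold.
    stagnant = sum(1 for t in residence_times_s if t > threshold_s)
    return stagnant / len(residence_times_s)


# Made-up residence times (seconds) for ten tracer parcels in each case.
healthy = [0.8, 1.1, 1.5, 0.9, 2.0, 1.3, 1.7, 0.6, 2.4, 1.2]
impaired = [0.9, 3.5, 6.2, 1.4, 7.8, 2.1, 5.5, 1.0, 9.3, 4.0]

print(stasis_fraction(healthy, threshold_s=5.0))
print(stasis_fraction(impaired, threshold_s=5.0))
```

A real pipeline would obtain residence times from a flow simulation of a patient's heart; a single share above a threshold is only one of many ways such a quantity could be summarized.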

“We found that in a healthy heart, there will be no stagnation,” Mittal said. “The fluid dynamics in a healthy heart virtually eliminates flow stagnation and clot formation. Ninety-nine percent of the blood that comes into the heart gets flushed out within five seconds.”

For cardiologists and researchers, this rethinking of how to predict blood clots could be a game changer. “The viewpoint that blood clot formation is a stronger driver of the functional morphology of the heart is receiving more attention from the broader scientific community,” Mittal said.
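
To give a feel for what a washout figure like “99 percent within five seconds” measures, here is a deliberately crude sketch, assuming a single ventricle whose contents are perfectly mixed before every beat, so each beat ejects the same fraction (the ejection fraction) of whatever original blood remains. This is not how Mittal's team models the heart; their point is that real flow patterns, not perfect mixing, govern washout. The function names, ejection fractions, and heart rate are illustrative assumptions.

```python
def remaining_fraction(ejection_fraction, beats):
    """Share of the original ventricular blood still present after `beats`
    cycles, assuming the ventricle is perfectly mixed before every ejection."""
    if not 0.0 < ejection_fraction < 1.0:
        raise ValueError("ejection_fraction must be between 0 and 1")
    return (1.0 - ejection_fraction) ** beats


def seconds_to_wash_out(ejection_fraction, heart_rate_bpm, target_flushed=0.99):
    """Time (seconds) until at least `target_flushed` of the original blood
    has been ejected, counted in whole beats at a steady heart rate."""
    if not 0.0 < target_flushed < 1.0:
        raise ValueError("target_flushed must be between 0 and 1")
    if heart_rate_bpm <= 0:
        raise ValueError("heart rate must be positive")
    beats = 0
    # Add beats until the flushed share reaches the target.
    while 1.0 - remaining_fraction(ejection_fraction, beats) < target_flushed:
        beats += 1
    return beats * 60.0 / heart_rate_bpm


# Illustrative values: a normal-range and a reduced ejection fraction at 60 bpm.
print(seconds_to_wash_out(0.6, 60))
print(seconds_to_wash_out(0.3, 60))
```

Even this toy model shows why a weaker heart, ejecting a smaller share of its contents each beat, leaves blood behind for longer.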

Predicting Plaque Ruptures

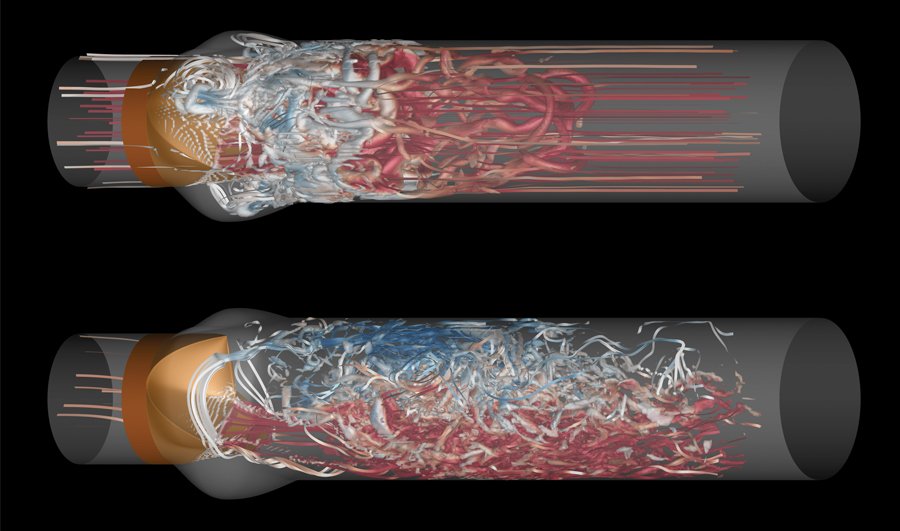

If clots caused by stagnation are one significant factor in cardiac ailments, plaques are another. A plaque is a collection of white blood cells and lipids in the wall of an artery, and some plaques are particularly unstable. About 70 percent of heart attacks are caused by these vulnerable plaques, according to Tom Hughes, a professor of Aerospace Engineering and Engineering Mechanics in the Oden Institute for Computational Science and Engineering at UT Austin.

“For the most part, vulnerable plaques have not been diagnosed by imaging alone,” Hughes said. “Many people have them, but the question is: Are they likely to rupture? That’s what has to be discerned.”

These plaques form a pool within the artery wall. That pool is separated from the bloodstream by a thin fibrous cap that can become inflamed. When such a thin cap ruptures, a blood clot follows, which can cause a heart attack or stroke.
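
A textbook idealization helps show why thinness matters. Laplace's law for a thin-walled cylinder gives the hoop stress as σ = P·r/h, where P is the pressure, r the radius, and h the wall thickness, so for the same blood pressure and radius, halving the thickness doubles the stress. Treating a fibrous cap this way is an assumption for illustration only; it is not the plaque model Hughes's team is building, real caps are irregular, and the pressure, radius, and thickness values below are made up.

```python
MMHG_TO_PA = 133.322  # 1 mmHg is about 133.322 pascals


def hoop_stress_kpa(pressure_mmhg, radius_mm, thickness_mm):
    """Laplace's law for a thin-walled cylinder, sigma = P * r / h, in kPa.

    An idealization: real fibrous caps are neither uniform nor cylindrical.
    """
    if radius_mm <= 0 or thickness_mm <= 0:
        raise ValueError("radius and thickness must be positive")
    pressure_pa = pressure_mmhg * MMHG_TO_PA
    # r / h is dimensionless, so the millimetre units cancel.
    return pressure_pa * radius_mm / thickness_mm / 1000.0


# Hypothetical vessel: 120 mmHg pressure, 1.5 mm radius, thinning cap.
for cap_mm in (0.4, 0.2, 0.1):
    print(f"cap {cap_mm} mm -> {hoop_stress_kpa(120, 1.5, cap_mm):.0f} kPa")
```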

With a new NSF grant in tow and working in collaboration with HeartFlow, a medical technology company, Hughes and his team will create mathematical models of the vulnerable plaques in the coronary arteries of about 1,600 patients.

“This is an unprecedented amount of data, so this could be a significant development,” Hughes said. “HeartFlow obtained the computed tomography (CT) images and is doing the blood flow analysis, and we’re creating the mathematical models of the plaques and the fibrous caps from the imaging data.”

According to Hughes, in the mid-1990s researchers could image only the larger arteries, such as the aorta. As imaging became more refined in the 2000s, researchers began imaging the smaller coronary vessels with CT. These coronary vessels are the key to heart attacks and strokes. Once researchers could obtain accurate images, they could perform patient-specific modeling of the coronary vasculature.

Hughes hopes that his team can move from imaging and mathematical analysis of plaques to a computational procedure that predicts the probability that a plaque will rupture within a given timeframe.

“In a way it’s like trying to predict an earthquake,” Hughes said. “I hope what we obtain from the data and our analyses is realistic guidelines for an individual patient. For example, we’d like to let a patient know that there’s a 50 percent chance that vulnerable plaque will rupture in six months to a year.”
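
One simple way to turn a per-patient risk into a statement like “a 50 percent chance within a year” is a survival model with a constant hazard rate λ, where the probability of rupture by time t is P(t) = 1 − e^(−λt). The article does not say what statistical form Hughes's procedure will take; the constant-hazard assumption, the function names, and the 12-month calibration below are hypothetical.

```python
import math


def rupture_probability(hazard_per_month, months):
    """Probability of rupture within `months`, assuming a constant hazard rate."""
    if hazard_per_month < 0 or months < 0:
        raise ValueError("hazard and time must be non-negative")
    return 1.0 - math.exp(-hazard_per_month * months)


def hazard_for(probability, months):
    """Constant hazard rate that yields `probability` of rupture within `months`."""
    if not 0.0 < probability < 1.0 or months <= 0:
        raise ValueError("probability must be in (0, 1) and months positive")
    return -math.log(1.0 - probability) / months


# Hypothetical patient: calibrate to a 50 percent chance of rupture within 12 months.
lam = hazard_for(0.5, 12)
print(round(rupture_probability(lam, 6), 3))   # chance within 6 months
print(round(rupture_probability(lam, 12), 3))  # chance within 12 months
```

A constant hazard is the simplest possible choice; a patient-specific model would more plausibly let the risk change over time as the plaque and cap evolve.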

TACC resources play a critical role in Hughes’ research. He has been using supercomputers at TACC for 17 years, since he came to UT Austin from Stanford University.

“TACC’s platforms are essential to my work,” Hughes said. “Moving from imaging to computational models and analyzing on a patient-specific basis — that to me is the future of medicine.”

Patient-Specific Treatments for Leakage in the Heart

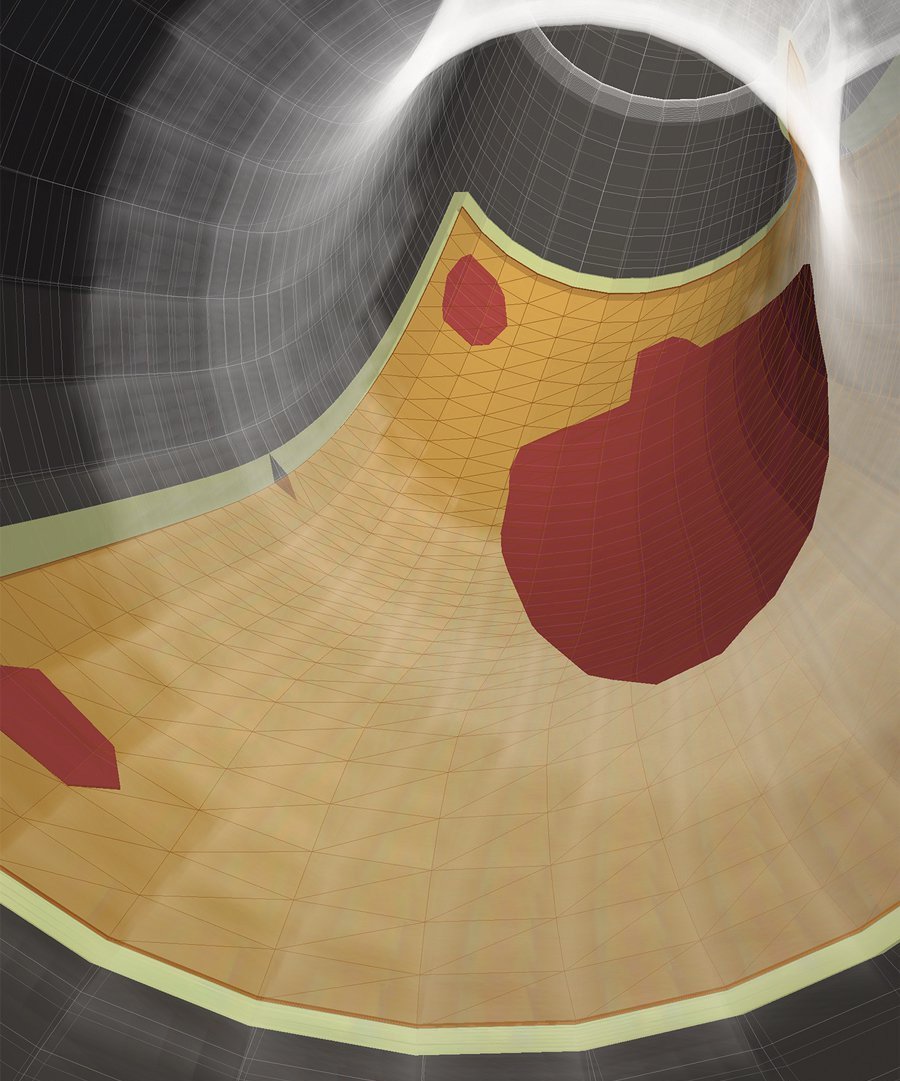

The mitral valve regulates blood flow from one chamber of the heart, the left atrium, into another, the left ventricle. The most common heart valve disease is mitral regurgitation, in which blood leaks backward through the mitral valve each time the left ventricle contracts.
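
The severity of this leak is often summarized as a regurgitant fraction: the volume that flows backward into the left atrium divided by the total volume the left ventricle ejects per beat. The sketch below assumes the mitral valve is the only leaking valve, so whatever does not go forward into the aorta leaks back; the function name and the volumes are illustrative, not clinical data.

```python
def regurgitant_fraction(total_stroke_volume_ml, forward_stroke_volume_ml):
    """Share of each ventricular contraction that leaks back into the left atrium.

    Assumes the mitral valve is the only leaking valve, so the regurgitant
    volume is total stroke volume minus forward (aortic) stroke volume.
    """
    if total_stroke_volume_ml <= 0:
        raise ValueError("total stroke volume must be positive")
    if not 0 <= forward_stroke_volume_ml <= total_stroke_volume_ml:
        raise ValueError("forward volume must lie between 0 and the total")
    regurgitant_volume_ml = total_stroke_volume_ml - forward_stroke_volume_ml
    return regurgitant_volume_ml / total_stroke_volume_ml


# Made-up example: the ventricle ejects 100 mL per beat, but only 60 mL goes
# forward into the aorta; the other 40 mL leaks back through the mitral valve.
print(regurgitant_fraction(100.0, 60.0))
```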

A particularly lethal form of this disease occurs after a heart attack, when portions of the left ventricular wall die and the mitral valve can no longer close properly. To date, there are no consistently acceptable methods to repair the mitral valve.

Michael Sacks, professor of Biomedical Engineering and director of the Willerson Center for Cardiovascular Modeling and Simulation at the Oden Institute, is working to develop high-fidelity computational models that predict the optimal surgical approach for repairing the mitral valve.

“At present, surgical repairs are done largely empirically,” Sacks said. “No one has developed any real basis for rationally designing mitral valve repair based on pre-surgical information, and most importantly, determining which repair method is best for a particular patient.”

Currently, during open-heart surgery, surgeons attempt to repair the mitral valve by inserting an annuloplasty ring, which reduces the size of the mitral valve opening so that the valve becomes competent again, that is, able to close fully. Surgeons perform pre-surgical imaging to get an idea of the basic geometric features of the diseased valve and to help guide the procedure, but little further analysis is performed.

Paradoxically, some patients do very well with the surgery, and some don’t do well at all. “The problem is doctors have no way to predict these outcomes,” Sacks said. Moreover, new minimally invasive procedures can repair the mitral valve without open-heart surgery, yet it is unknown how best to use these procedures for each patient.

With a new grant from the National Institutes of Health and the Moss Heart Foundation, Sacks and his team are trying to solve this problem. They are constructing high-fidelity computational models of the mitral valve directly from the available clinical images. Using these models, they can better predict the post-surgical state of the valve from the pre-surgical data.

“This is a relatively new development in computational medicine — to be able to directly predict a surgical outcome,” Sacks said. “Additionally, we’re pushing the models to determine what the mitral valve is going to look like months to a year after the repair.” Such long-term models are the key to developing the best procedures for each patient.

Sacks wants to develop models that can be solved in a timeframe relevant for the clinician. This means taking model development and computational time from weeks or days to hours.

“This is where supercomputing technology comes into play,” Sacks said. “Our current models are computationally expensive, requiring many hours to run, making them unusable for routine clinical use. Supercomputer technology allows us to develop each model and perform the required simulations with both high fidelity and speed.”

The goal is to develop the model, evaluate its predictive capability, and then develop software that can be used in clinical trials — a first step towards saving lives.

How can physicians harness computation to develop better diagnostics? Can these same tools provide clinicians with a way to treat diseases that wasn’t available before?

Mittal, Hughes, and Sacks are among a growing cadre of researchers using computational models to understand complex interactions in the heart, which are often multifaceted and non-intuitive. Models allow them to understand disease mechanisms, aid in diagnosis, and test the effectiveness of different therapies. With computer models, researchers can explore potential therapies “in silico” at high speed. They can then use the results to guide further experiments, gather new data, and refine the models until they are highly effective at saving lives.