Aaron Dubrow

Operation Frontera

TACC launches fastest academic supercomputer in the world

It was five months into the construction of Frontera. 364,000 pounds of aluminum, steel, and silicon had already been rolled across the reinforced floor of the TACC data center tucked away on The University of Texas J.J. Pickle Research Campus in North Austin. Staff had unspooled more than 50 miles of networking cable and carefully connected the 16,000 processors that make up TACC’s latest supercomputer.

“The system was fully networked, the software stack was set, and I realized we were preparing to run one of the largest computations that had ever been performed in the history of civilization,” TACC’s Executive Director Dan Stanzione said. “It was a humbling and awe-inspiring feeling to harness this amount of cutting-edge technology and apply it to a single problem.”

The problem, known as LINPACK, is a dense system of linear equations that lets researchers compare computing systems in an apples-to-apples way. Using all of its nearly half a million processing cores, Frontera performed 23.5 quadrillion mathematical operations per second (23,500,000,000,000,000 multiplications and divisions), roughly what a person could achieve by performing one calculation every second for about 750 million years without stopping.
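The human-scale comparison above can be checked with a few lines of arithmetic. The sketch below is only an illustration: the function and variable names are made up for this example, and it assumes a Julian year of 365.25 days. It takes the 23.5 quadrillion operations Frontera completes in one second and asks how long a person would need at one operation per second.

```python
# Back-of-the-envelope check of the "one calculation per second" comparison.
# Assumption: a year is a Julian year of 365.25 days.
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60


def years_at_one_op_per_second(ops: float) -> float:
    """Years needed to perform `ops` calculations at a rate of one per second."""
    return ops / SECONDS_PER_YEAR


# Frontera's LINPACK result as reported in the article: 23.5 quadrillion ops/s.
frontera_ops_in_one_second = 23.5e15

years = years_at_one_op_per_second(frontera_ops_in_one_second)
print(f"About {years / 1e6:.0f} million years")
```

The result lands in the hundreds of millions of years, about three-quarters of a billion, so one second of Frontera's work corresponds to a span of human effort far longer than recorded history.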

The calculation secured the #5 spot on the June 2019 Top500 list of the world’s fastest supercomputers, making Frontera the highest-ranked Dell system and the fastest primarily Intel-based system ever. Given that the four systems that precede it on the Top500 list use new types of processors that are not widely adopted in science, Frontera is arguably the most powerful general-purpose supercomputer in the world.

The results cemented The University of Texas’ position as the leading university for advanced computing in the world, with the #1 and #2 (Stampede2) supercomputers at any U.S. university. Stanzione estimates that 80 percent of all calculations run by U.S. university researchers on high-performance computing systems are powered by TACC supercomputers.

But Frontera is special in its own right. “Academic researchers have never had a tool this powerful to solve the problems that matter to them,” Stanzione said.

The Build

Now in its 19th year, with dozens of supercomputer deployments under its belt, TACC is no novice in constructing these massive scientific instruments. Nonetheless, building Frontera required a herculean effort on the part of the TACC staff and its technology partners; some innovative engineering solutions; and even a bit of luck.

Never before had TACC built a system as quickly, or compressed so much compute power into such a small physical space. Plans (and promises) for Frontera’s deployment had been crafted two years before the chips and components that composed it came off the assembly line.

“Designing a system like Frontera takes a bit of wishful thinking,” said Tommy Minyard, TACC director of Advanced Computing Systems. “We have a sense of our technology partners’ roadmaps, and have to plan for any bumps that may occur along the way.”

TACC learned in August 2018 that it had won the $60 million award from NSF to build the agency’s new leadership-class supercomputer, something few in the community expected. The announcement kicked off a massive overhaul of TACC’s data center — clearing space for Frontera that previously was occupied by Stampede1, upgrading the power and cooling systems in the machine room for an ultra-dense cluster, and reinforcing the floors for larger, heavier racks than TACC had ever used before.

The machine room upgrades were completed by January 2019, and the first racks of storage and networking hardware arrived shortly after. However, factory delays held up the servers until April — only four months before TACC had committed to presenting an operational machine.

One by one, the 10-foot-tall servers, each a black monolith, rolled in, eventually covering 2,400 square feet, and leading some TACC staff to dub the area the “caves of steel.” With time of the essence, TACC’s Large Scale Systems group, which led the construction of Frontera, organized teams of TACC volunteers every day over nearly two months to help wire the system, unspooling, de-kinking, and installing more than 50 miles of high-speed, fiber-optic networking cable.

“It was a team effort,” said Laura Timm Branch, TACC Senior Systems Administrator. “Almost everyone working at TACC played a role in getting the system built in time.”

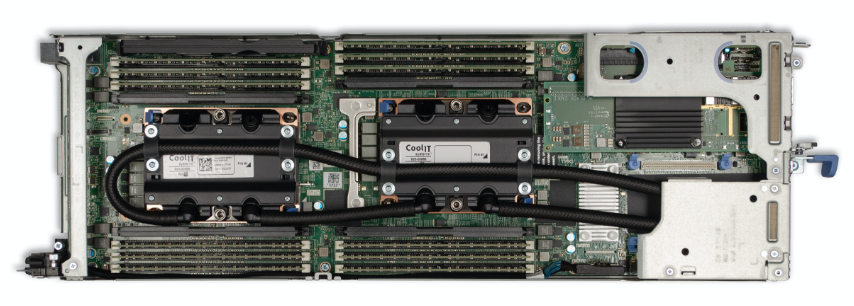

For such a dense system, packing many more processors into a smaller footprint than ever before, TACC had to deploy innovative cooling technologies. These include liquid-cooled copper plates that sit on each Xeon chip and circulate fluid to whisk the heat away, requiring nearly 2,500 liters of coolant, as well as fans on each rack provided by Cooltera.

“With 60,000 watts in a cabinet, it means everything we've done before in cooling was sort of out the window,” Stanzione said.

The Benchmarks

Once the systems group had completed the construction, TACC’s High Performance Computing group took over, installing the software stack, testing the system and, together with the Large Scale Systems group, debugging the problems that inevitably arose.

TACC had a mandate from NSF to build a system that could run a series of scientific codes three times faster than Blue Waters, the previous leadership-class NSF-funded system.

“Installing scientific software isn’t like downloading a program or app for your desktop or smart phone,” said Bill Barth, TACC’s director of Future Technologies. “Supercomputers are like race cars. They need careful tuning and optimizing of the hardware and software to perform at scale and peak speed across thousands of processors. Fortunately, TACC’s HPC team is among the best in the world at this type of task.”

One by one, the team exceeded the previous high marks on the 12 benchmark codes and eventually eclipsed them by an average of 4.3 times. Simultaneously, they had to make sure the system was stable, while making it available to the 36 science teams selected by NSF for early access to Frontera.

With Frontera completing 99.9 percent of its jobs, and providing early scientists more and more computing power — often at a scale they never had been able to access before — TACC turned its attention to Frontera’s two initial subsystems, which provide additional performance and allow TACC to explore alternate computational architectures for the future.

An NVIDIA-based system with 360 Quadro RTX 5000 GPUs (graphics processing units) submerged in liquid coolant racks developed by GRC (Green Revolution Cooling) allows TACC to test more efficient ways to cool future systems, and assess community interest in single-precision optimized computing.

An IBM POWER9-hosted system called Longhorn, with 448 NVIDIA V100 GPUs, provides additional performance. These subsystems accelerate artificial intelligence, machine learning, and molecular dynamics research for Frontera researchers in areas ranging from cancer treatment to biophysics.

The integration of additional testbed systems, including FPGAs (field-programmable gate arrays) and ARM processors, is underway and will continue throughout Frontera’s lifespan.

“Frontera will continue to grow and evolve,” said John West, TACC’s director of Strategic Initiatives, “providing more computer power and experimenting with new ways to compute.”

The Launch

On September 3, 2019, surrounded by partners from government, academia, and industry, Stanzione launched Frontera, announcing that the system had been formally accepted and was ready for business.

“You might ask, what drives us to keep improving? To keep building faster and more dynamic supercomputers?” said UT President Greg Fenves. “Well, the answer is simple. It is our fundamental desire to understand the greatest mysteries of our Universe, and our collective need to solve the greatest challenges facing humanity – from health care to climate change. That’s what Frontera was built for.”

"Fundamental research transforms the future,” said Fleming Crim, NSF’s chief operating officer. “Every powerful, new apparatus we create — be it a computer, telescope, gravitational wave observatory — opens the door to more powerful things."

The Future

Frontera, the system, was only the beginning. Frontera, the project, marches on. A $60 million award procured the supercomputer. A further $60 million award from NSF funds TACC to operate and maintain the system and support researchers over the next five years. An additional $2 million per year award from NSF supports Phase 2 planning — an effort to gather scientific and technical requirements and design a potential follow-on system with 10 times the capabilities of Frontera.

“We recognize the awesome responsibility that comes with this project,” Stanzione said. “We are here to serve computational science and engineering across the U.S. academic research enterprise, lead the array of NSF computational resources and communities of practice around them, and envision the future of leadership-class cyberinfrastructure resources for NSF.”

Frontera entered full operation in October 2019, supporting dozens of research groups from universities across the U.S. Peer-review committees allocate 80 percent of Frontera’s compute time to three kinds of work: the most significant challenges that require the system for days on end; researchers ready to scale up to the largest academic system; and collaborations with large-scale international science experiments, including the Large Hadron Collider and the Laser Interferometer Gravitational-Wave Observatory.

The remaining 20 percent of the time on Frontera will be reserved for a host of other efforts, including emergency access to Frontera by natural hazards forecasters in the face of hurricanes and earthquakes; and use by industrial partners testing new codes or technologies. Since science never rests in its relentless search for truth, the effort to envision and design a next-generation system 10 times more capable to replace Frontera around 2025 is already underway.

What will computer chips, memory, and storage look like in five years? What emerging technologies might rival, or complement, CPUs and GPUs? And how are scientific workloads evolving in the face of new paradigms like machine and deep learning?

Time will tell, and TACC’s leaders will have to know long before the rest of us to begin the cycle of designing, building, and deploying the most powerful supercomputer for science.

In his remarks at the Frontera dedication, Stanzione quoted the 19th-century chemist and inventor Humphry Davy: “Nothing tends so much to the advancement of knowledge as the application of a new instrument. The native intellectual powers of men in different times are not so much the causes of the different success of their labors, as the peculiar nature of the means and artificial resources in their possession.”

As a remarkable and unique instrument, Frontera is poised to open new vistas in science.