Aaron Dubrow

Dispatches From Frontera

Research teams use Frontera to run unprecedented simulations

TACC’s Frontera supercomputer launched in fall 2019 with great expectations.

As the leadership-class system in the U.S. National Science Foundation’s computing portfolio — debuting as the fifth fastest supercomputer in the world — Frontera was built to power academic research at a scale previously unachievable.

"State-of-the-art supercomputing resources like Frontera are an invaluable resource for researchers," said Alvin Yu, a computational biologist in the Voth Group at the University of Chicago. "Frontera enables large-scale simulations that examine processes that simply cannot be performed on smaller supercomputing resources."

Hundreds of research groups have computed on Frontera since it powered up, contributing to practically every area of science. Some of these projects achieved the largest-ever simulations in their field; others used Frontera to attempt a problem for the first time ever. Together, they exhibit the unique potential of the system to advance science.

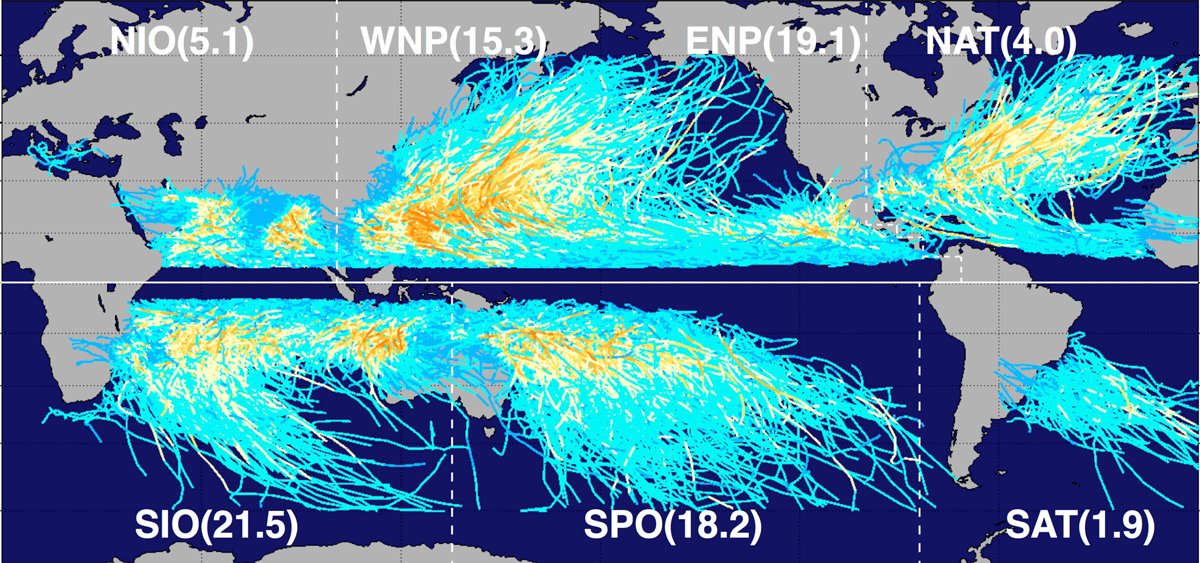

Computing our Climate Future

Ping Chang is a leading climate researcher and professor of Oceanography and Atmospheric Sciences at Texas A&M University.

Chang and an international team of collaborators used Frontera to simulate the Earth’s climate from 1950 to 2050 using a newly configured high-resolution version of the Community Earth System Model developed at the National Center for Atmospheric Research (NCAR) with support from NSF and the Department of Energy.

The simulation is roughly 160 times more detailed than the ones used for official climate assessments by the Intergovernmental Panel on Climate Change. Its increased resolution lets the team track the future evolution of weather extremes, such as hurricanes, and investigate phenomena like atmospheric rivers and ocean currents for the first time.

It also requires more than 100 times more computing power than previous models. Frontera is one of only a handful of systems in the world that can run the simulation in months rather than years.

The Frontera-produced simulations found that warming temperatures and more CO2 in the atmosphere lead to changes to the Atlantic Meridional Overturning Circulation, a major ocean current that transports heat and salt from the tropics to the polar regions of the Northern Hemisphere.

"Understanding the climate problem is one of the top priorities for science and society," Chang said. "Having a better ability to project into the future is very important and using large computing systems like Frontera is how we can do this."

From the Atmosphere to the Earth’s Crust

The Southern California Earthquake Center, or SCEC, is one of the preeminent hubs of earth science research. For over three decades, the center has relied heavily on computational models and advanced codes running on high-performance computing (HPC) systems to better understand earthquakes and their risks.

SCEC scholars were early adopters of the Frontera system. “We’ve made a lot of progress on Frontera in determining what kind of earthquakes we can expect, on which fault, when,” said Christine Goulet, executive director for Applied Science at SCEC.

“To do that, we use an earthquake simulator for which the input is our best knowledge of fault locations and geometries. We launch a simulation of hundreds of thousands of years, and just let the code transfer the stress from one fault to another, creating earthquake ruptures in the process.”

The result is called a synthetic earthquake catalog: a record of each simulated earthquake, listing where and when it occurred and its magnitude. The catalogs that the SCEC team has amassed using Frontera and the Blue Waters system at the National Center for Supercomputing Applications are among the largest ever made for California, according to Goulet.
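To make the idea of a synthetic catalog concrete, here is a deliberately tiny sketch of the loop Goulet describes: faults slowly accumulate stress, rupture when they cross a threshold, and pass part of the released stress to their neighbors. Everything in it is an illustrative assumption rather than SCEC's actual simulator: the names `Event` and `simulate_catalog`, the chain-of-faults layout, the loading rates, the transfer fraction, and above all the magnitude formula, which is an arbitrary placeholder and not a physical scaling law.

```python
import random
from dataclasses import dataclass


@dataclass
class Event:
    """One catalog entry: when, where, and how big (all toy values)."""
    year: int
    fault: int
    magnitude: float


def simulate_catalog(n_faults=5, years=10_000, seed=0):
    """Toy stress-transfer model on a chain of faults (hypothetical)."""
    rng = random.Random(seed)
    threshold = 1.0   # assumed: a fault ruptures once stress reaches 1.0
    transfer = 0.2    # assumed: fraction of the stress drop sent to each neighbor
    loading = [rng.uniform(0.002, 0.01) for _ in range(n_faults)]
    stress = [rng.uniform(0.0, 0.5) for _ in range(n_faults)]
    catalog = []

    for year in range(years):
        # Slow tectonic loading on every fault.
        for i in range(n_faults):
            stress[i] += loading[i]

        # Rupture any fault at or above threshold, then follow the cascade.
        pending = [i for i in range(n_faults) if stress[i] >= threshold]
        while pending:
            i = pending.pop()
            if stress[i] < threshold:
                continue  # already ruptured earlier in this cascade
            drop = stress[i]
            stress[i] = 0.0
            # Placeholder magnitude: NOT a real magnitude-scaling relation.
            catalog.append(Event(year, i, round(6.0 + drop, 2)))
            for j in (i - 1, i + 1):
                if 0 <= j < n_faults:
                    stress[j] += transfer * drop
                    if stress[j] >= threshold:
                        pending.append(j)
    return catalog


if __name__ == "__main__":
    events = simulate_catalog()
    print(len(events), "synthetic events; first three:", events[:3])
```

Even this toy shows why the real problem is expensive: the catalog only becomes statistically useful after very long simulated time spans, and a realistic model has to track far more faults and far richer physics than a five-element list.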

The work is helping to determine the probability of an earthquake occurring along any of California’s hundreds of active faults, the scale that could be expected, and how it may trigger other quakes.

It takes a lot of processing power to run these kinds of simulations.

“Sometimes the problem is too big. Sometimes we just have too many small problems to solve. Even though we could run them in parallel, the fact that it would take so long on a regular cluster would make it impossible to make progress if we didn’t have systems like Frontera,” Goulet said.

From the Global to the Nanoscale

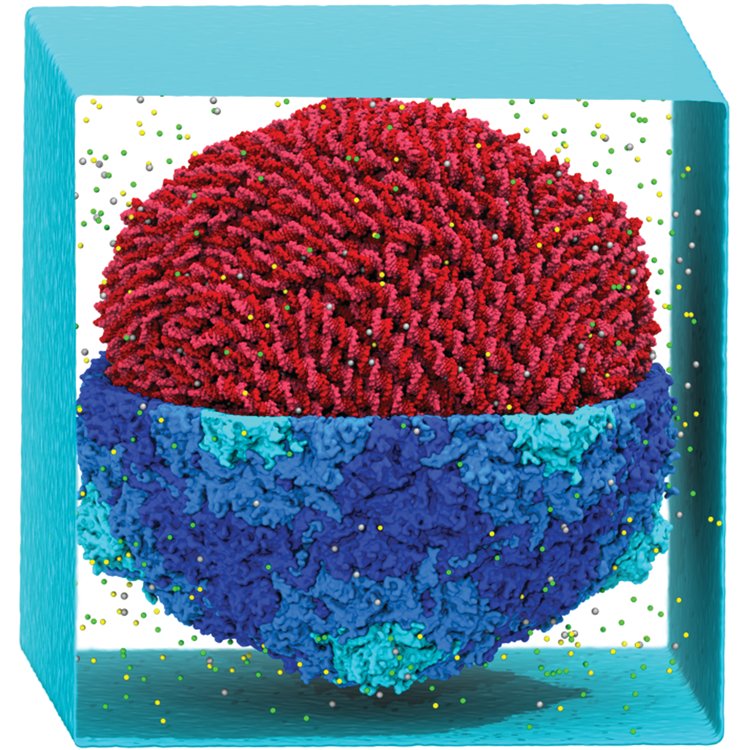

“I am writing to share some exciting recent results that have been solely possible due to the impressive performance scaling on Frontera.” So began Aleksei Aksimentiev, professor of Physics at the University of Illinois at Urbana-Champaign, in a note to TACC.

Taking advantage of his early access allocation on Frontera, Aksimentiev was able to realize a landmark achievement in the area of computational biophysics — a full, atomic-level simulation of a mature virus, including its DNA.

Aksimentiev’s team computationally modeled the bacteriophage HK97, a virus that infects E. coli and has a genome of around 40,000 DNA base pairs packed inside a protein capsid.

“Viruses have been modeled successfully before, but only the capsid, the lipid envelope,” he said. “No one has ever looked at what’s inside it with a simulation.”

Including the viral DNA, the whole system consisted of roughly 26 million atoms — large-scale by any measure. Aksimentiev’s team not only modeled the virus, they simulated its mechanics for a microsecond — one millionth of a second — and in the process shone a light on a biological mystery: how the virus packs its genome into a tiny protein shell.

The simulation required a month of compute time on Frontera. Previously, it took Aksimentiev more than a year on the most powerful machines in the U.S. to simulate this timescale.

“It’s a unique instrument to look at how things operate on the microscale and explore biological processes in their native environments within a human cell,” Aksimentiev said.

Many other scientists have achieved computational milestones on Frontera. Biologist Greg Voth from the University of Chicago used Frontera to show how some species of monkey prevent HIV infection. Daniel Bodony at the University of Illinois at Urbana-Champaign replicated NASA experimental flight tests in a virtual Mach 6 tunnel. And University of Utah’s Carleton DeTar ran particle physics simulations 16 times larger than any he had previously calculated, helping to predict the mass of subatomic particles.

These projects, as different as they are, share a key characteristic: they reveal aspects of the Universe, or provide predictions and design guidance, by applying computing at a massive scale.

With Frontera only one year into its five-year life, more groundbreaking computational science is anticipated.

"On Frontera, we're running some of the largest science problems ever," said Dan Stanzione, TACC executive director. "TACC worked hard to support calculations that use half, and in some cases up to 97 percent, of the entire system. This is an unprecedented scale for us and for our users, but it’s the scale we built Frontera for – the biggest problems in the world. It's what differentiates how we use Frontera from every other system."