Urgent Computing

When time’s running out for answers

The Space Shuttle Endeavour blasted off on May 16, 2011, for its 25th and final mission, with astronaut Mark Kelly in command for his last spaceflight. Trouble brewed from the launch, where footage showed possible damage to the heat shield, similar to what had happened to the Space Shuttle Columbia in 2003. Fortunately, NASA had increased its vigilance on shuttle heat shields since that accident, which was caused by heat shield damage from fuel tank debris at launch.

A scientific assessment had to be made quickly about whether the space shuttle needed repairs or, worse, a rescue mission. Endeavour’s crew inspected the damaged heat tiles with the Orbiter Boom Sensor System, a post-Columbia addition. Its cameras and lasers scanned the tiles and beamed that information back to mission control.

The inspection took about two hours, and NASA cleared Endeavour for what ended as a safe re-entry back to Earth.

Ground Control to Advanced Computing

It showed foresight to have a backup plan in place that used computer analysis on the ground to avert a disaster in space. It also showed how advanced computing can quickly transform into urgent computing when time is of the essence.

Centers like TACC have long focused on basic research, powering scientific discoveries in materials science, physics, and astronomy, and driving innovation in our nation. But since the 2003 Columbia disaster, when TACC resources were used to guide investigators to shuttle debris in East Texas, the center has engaged in urgent computing.

For agencies such as the National Oceanic and Atmospheric Administration (NOAA) or the U.S. Geological Survey, urgent computing is a critical mission. For TACC, it’s an opportunity to be a better global citizen and make a major difference in times of crisis.

Building the systems and cyberinfrastructure needed for rapid response before a disaster is key to a successful outcome, analogous to the Orbiter Boom on Endeavour that scanned for trouble.

Whether for COVID-19 research, extreme weather and earthquake forecasting, or mitigating human-made disasters, TACC has shown that its supercomputers are ready and its leadership is willing to respond to urgent crises.

COVID-19

The COVID-19 pandemic has ravaged lives and economies worldwide. In the U.S. alone, more than 220,000 people have died from COVID-19 and millions are out of work.

Scientists were quick to join the effort to stop the pandemic. Thousands pivoted their research to COVID-19, applying their knowledge and insights to the novel virus. Many of these projects required large-scale computing, and TACC committed its support early in the pandemic, helping more than 50 COVID-19 research projects that track the disease and crack open the virus’ defenses.

Coronavirus Public Health

The UT Austin COVID-19 Modeling Consortium has produced some of the most accurate local and national forecasts of COVID-19 transmissions, hospitalizations, and deaths. Its research has supported timely decision-making by local, state, and national leaders, and it has been featured in major national news stories on CNN, the New York Times, and other publications.

UT Austin epidemiologist Lauren Ancel Meyers leads the UT consortium. It relies on TACC for high performance computing and analytics, using anonymized mobile-phone data, case counts, and hospitalization data from Johns Hopkins University.

Meyers’ team presented a timely COVID-19 risk report for school reopenings to the Texas Education Agency in July 2020. Widely reported by local and state media, it estimated how many students and teachers would start school infected with COVID-19 and it gave parents and decision-makers a clearer picture of the potential risk of infection in schools.

In April 2020, the consortium projected healthcare demands from COVID-19 in a report to Austin and Travis County. It indicated that Austin hospitals might be overwhelmed if social distancing measures were not put in place.

“We developed this framework to ensure that COVID-19 never overwhelms local health care capacity while minimizing the economic and societal costs of strict social-distancing measures,” Meyers said.

Separately, TACC has worked with the UT Austin Center for Space Research (CSR) to provide crisis decision support to the Texas State Operations Center.

“By working with TACC, we were able to provide rapidly updated predictions of future COVID-19 impacts for each of the state’s 22 Trauma Service Areas based on pandemic forecasts produced by the UT COVID-19 Consortium,” Gordon Wells, research associate at CSR, added.

Spike and Envelope

The SARS-CoV-2 virus that causes COVID-19 is new to science, discovered in December 2019 and first sequenced in January 2020. The pressure on scientists to find COVID-19 treatments, vaccines, and a cure has never been greater.

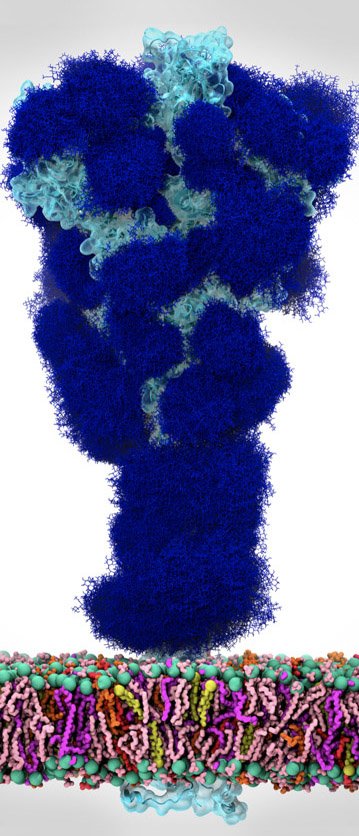

Typically, vaccines take years to develop, and that time is a luxury the world does not have. A computer model of the coronavirus developed by the Amaro Lab at the University of California, San Diego using TACC’s Frontera supercomputer is helping to accelerate the development of new treatments.

Early results by Rommie Amaro in June 2020 on the coronavirus spike protein revealed a new function for molecules called glycans. They coat the spike protein and help it elude the immune system. Amaro found they also change shape in a way that helps the virus bind with the ACE2 receptor on human cells. This binding is the first step to infection.

NSF Chief Operating Officer Fleming Crim commented on TACC’s urgency in supporting COVID-19 research: “NSF’s research cyberinfrastructure, in which TACC plays a leading role, has mobilized swiftly to respond to the pandemic, demonstrating the strategic importance of NSF’s investments as well as the agility of the computational ecosystem. This response is just one example of the way that NSF investments in fundamental research meet national needs.”

Protein Therapeutic Design

Function follows form for proteins, which can fold in nearly countless ways. That immensity of possibility means it can take months for computers to comb through all the ways a protein can fold and the behaviors each fold gives rise to. It’s arduous computational work, but it helps scientists find drug targets for vaccines and treatments.

Biochemist David Baker of the University of Washington is one of the pioneers of using computers to predict how proteins fold. Using TACC’s Stampede2 and Lonestar5 systems, Baker has been computationally designing and testing millions of possible proteins that can bind with the SARS-CoV-2 virus, narrowing the list down to 2,000 that show promise. What’s more, he’s developed a platform that evolves potential virus binding proteins both in silico and in the wet lab until a final binding protein is created from scratch that is shown to effectively fight the virus.

Baker is already looking ahead to applying his in silico-to-wet lab design platform to future pandemics beyond COVID-19. "Our goal for the next pandemic will be to have computational methods in place that, coupled with HPC centers like TACC, will be able to generate high affinity inhibitors within weeks of determination of the pathogen genome sequence."

Artificial Intelligence, Real Vaccine

Artificial intelligence (AI) and machine learning (ML) are emerging as important COVID-19-fighting tools.

Computational biologist Arvind Ramanathan of Argonne National Laboratory is working with an extensive network of collaborators involving national laboratories and universities across the U.S. and Europe on techniques that can effectively combine AI with rigorous physics-based modeling. Their methods enable them to comb through billions of potential drugs to find ones that might be effective at treating COVID-19.

Frontera ran millions of simulations to train the ML system to identify good drug candidates.

"The rapid response and engagement we have received from TACC has made a critical difference in our ability to identify new therapeutic options for COVID-19," said Rick Stevens, Argonne's associate laboratory director for Computing, Environment and Life Sciences.

“Supporting the new AI methodologies in this project gives us the chance to use those resources even more effectively,” said Dan Stanzione, TACC’s executive director.

The center devoted more than 30% of its computational cycles to COVID-19 related research through several months in 2020. Much of this, including the AI research, was done in conjunction with the COVID-19 HPC Consortium, a White House effort organized to bring together the Federal government, industry, and academic leaders and provide access to the world’s most powerful HPC resources in support of COVID-19 research.

“The consortium offers researchers an opportunity to collaborate in ways they might not have done before, such as by helping each other get their code up and running more quickly on the processors,” said Kelly Gaither, director of health analytics at TACC. “This encourages scientists from disparate specialties to link up and solve problems in new ways and to think creatively about how to incorporate supercomputers into their research.”

Hurricane Storm Surge

The natural world hasn’t stood still for COVID-19. Elements can still conspire and turn hazards into disasters.

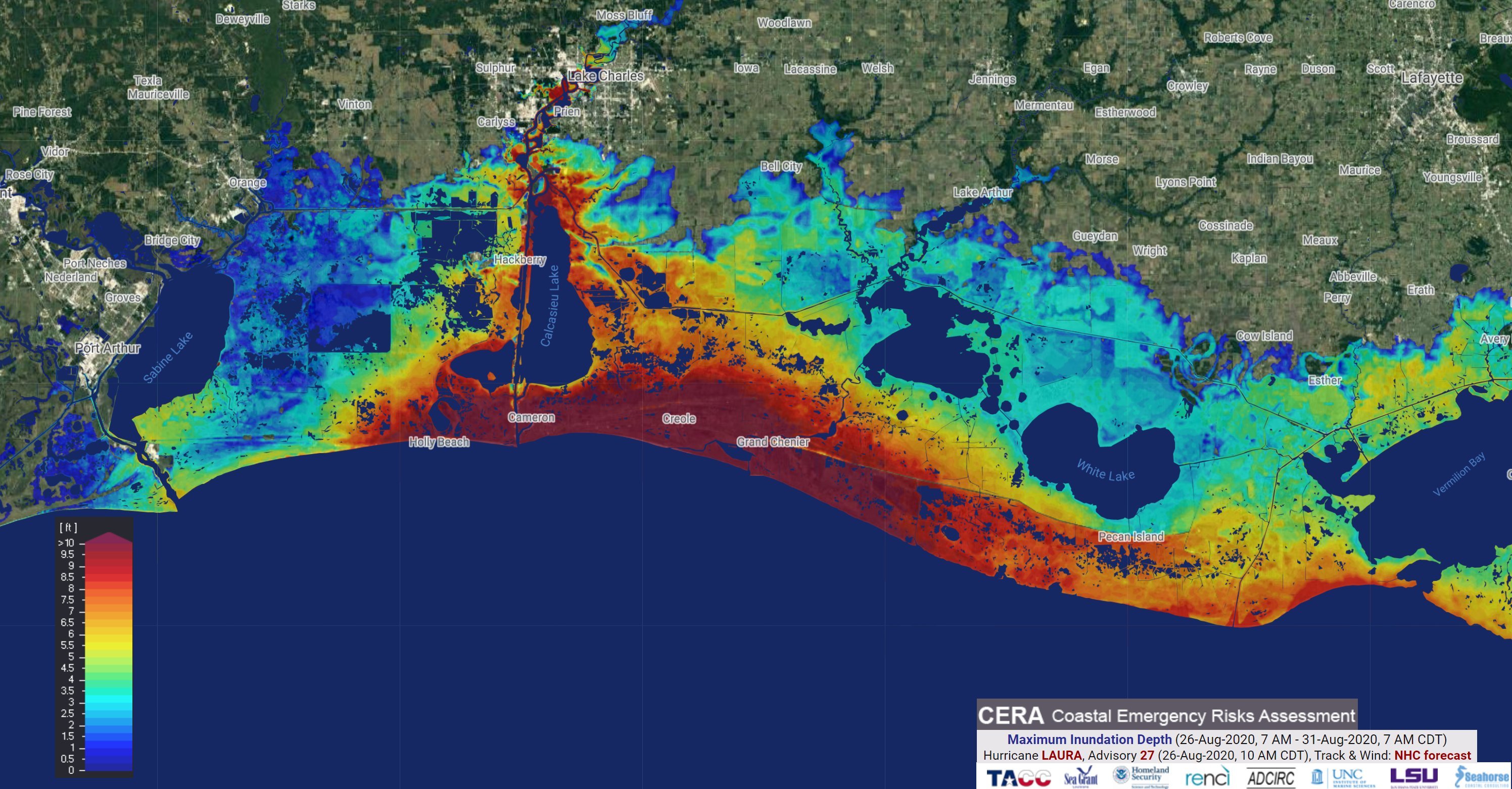

Storm surge is one of the most dangerous and difficult-to-forecast parts of a hurricane. High winds of more than 70 miles per hour can spray walls of breaking water over 20 feet high and more than a mile inland. Emergency managers need to know the moment-by-moment maximum water level over the coast during a hurricane.

Clint Dawson, chair of the Aerospace Engineering and Engineering Mechanics department at UT Austin, has been working with TACC since 2004 to develop the widely-used ADCIRC storm surge model. ADCIRC is a computational model that simulates processes such as tides, storm surge, and flows within ocean basins.

Whenever a storm emerges in the Gulf of Mexico or the North Atlantic, Dawson and his team spin up storm surge forecasts on Frontera, Stampede2, and Lonestar5, notably predicting days in advance several feet of water inundating Lake Charles from Hurricane Laura in 2020.

ADCIRC takes in forecast data generated every six hours by the National Hurricane Center and outputs storm surge predictions based on each hurricane forecast. Emergency managers throughout the Gulf and Atlantic coasts use the forecasts for transportation and evacuation decisions. First responders use them to determine which roads are safe for reaching impacted areas, how high the surge is going to be in those areas, and which areas need to be evacuated.

“If we can get people out of harm’s way in real time, we’re saving lives,” Dawson said.

Storm Response

In another example of urgent computing, TACC provides real-time storm simulations by 4 a.m. every day each spring that are used by the NOAA National Severe Storms Laboratory and by other storm-chasing scientists such as research meteorologist Xuguang Wang of the University of Oklahoma.

Wang leads efforts to improve the understanding and prediction of storm dynamics through her Plains Elevated Convection At Night (PECAN) project. PECAN predicts storm conditions such as temperature, pressure, wind, and rainfall.

TACC has also supported the Multiscale data Assimilation and Predictability (MAP) lab, likewise led by Wang, to produce more accurate, real-time simulations of convection, the vertical transport of heat and moisture in the atmosphere. The simulations allow for ensemble forecasting, where sets of numerical data and observed data combine to generate a range of forecasts.
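The idea behind ensemble forecasting can be illustrated with a minimal Python sketch. It is not the MAP lab's method or code: `toy_model`, `ensemble_forecast`, and every number below are hypothetical stand-ins chosen for illustration. Each ensemble member starts from the observed value plus a small random perturbation that represents measurement uncertainty, and the collection of results gives a range of forecasts rather than a single answer.

```python
import random
import statistics


def toy_model(temp_c, hours, warming_rate=0.5):
    """Hypothetical stand-in for a weather model: a simple linear trend."""
    return temp_c + warming_rate * hours


def ensemble_forecast(observed_temp_c, obs_error_c, members, hours, seed=0):
    """Run the toy model once per member from a perturbed starting value."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    forecasts = []
    for _ in range(members):
        # Perturb the observation to reflect assumed measurement uncertainty.
        start = rng.gauss(observed_temp_c, obs_error_c)
        forecasts.append(toy_model(start, hours))
    return forecasts


# Assumed inputs: a 20 °C observation with 1 °C uncertainty, 50 members,
# and a 6-hour lead time.
forecasts = ensemble_forecast(
    observed_temp_c=20.0, obs_error_c=1.0, members=50, hours=6
)

summary = {
    "mean": statistics.mean(forecasts),
    "spread": statistics.stdev(forecasts),
    "low": min(forecasts),
    "high": max(forecasts),
}
print(summary)
```

The gap between the lowest and highest members gives a rough sense of how confident the forecast is. A real system would replace the toy model with full atmospheric simulations, which is where supercomputers come in.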

“Understanding storm development and formation needs two indispensable research efforts,” said NSF Program Officer Chungu Lu. “There’s observing and collecting data in the real world during a storm event, and then putting the observational data into some diagnostic or prognostic models.”

Next Big Ones

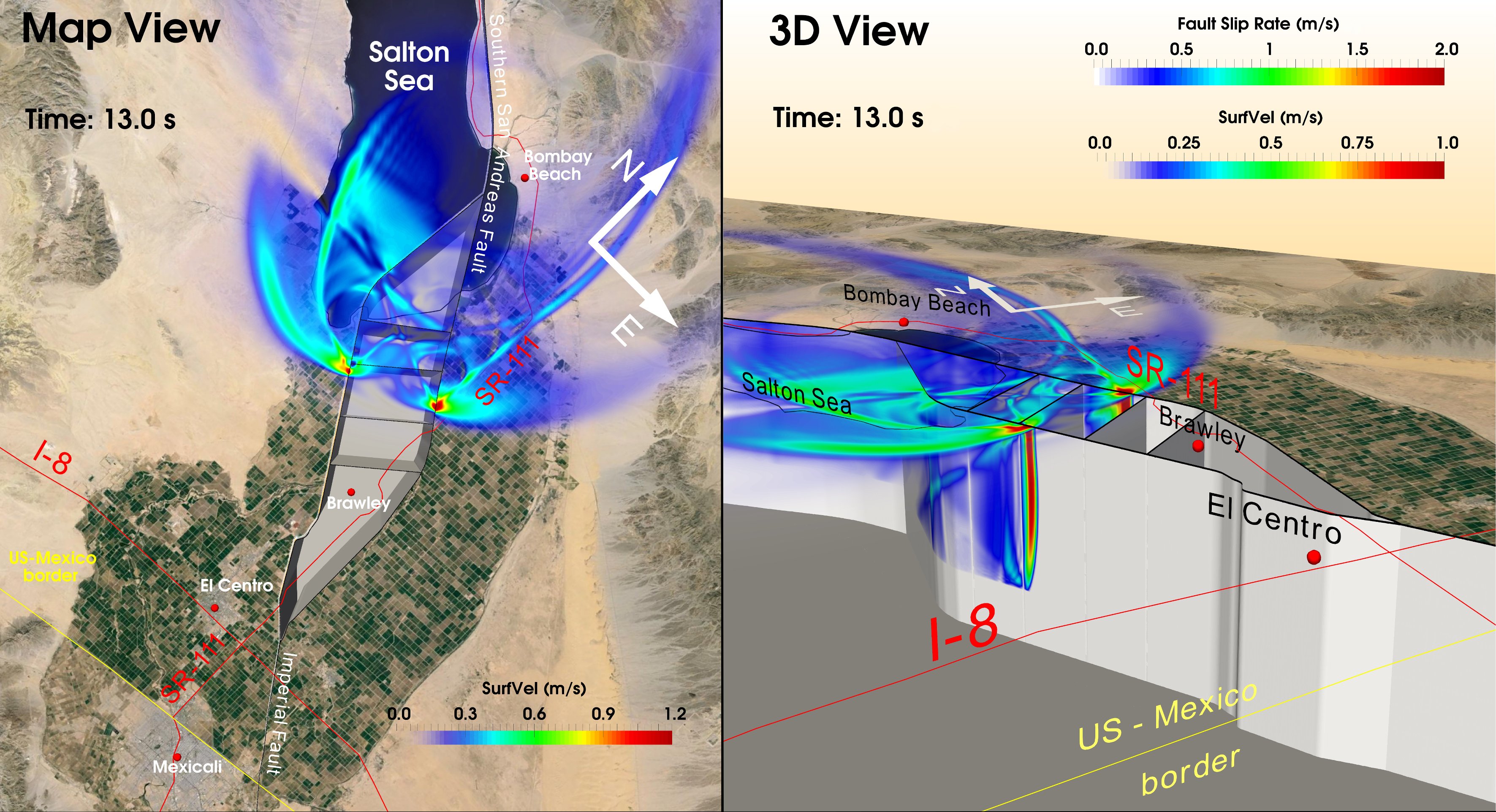

It’s hard not to worry about earthquakes if you live in Southern California. The big concern is the San Andreas Fault, for which the U.S. Geological Survey has estimated a seven percent chance of a magnitude 8.0 earthquake by 2033.

A less well-known risk is a rupture that propagates from one segment of a fault to another, forming a chain reaction of multi-fault earthquakes. Some of the world's most powerful earthquakes have involved multiple faults, such as the 2012 magnitude 8.6 Indian Ocean Earthquake.

Research geophysicist Christodoulos Kyriakopoulos of the University of Memphis Center for Earthquake Research and Information has used Stampede1 to simulate these complex earthquake ruptures in the Brawley seismic zone, a network of faults in Southern California. His results point to the possibility of a multi-fault earthquake in Southern California, with possibly dire consequences.

“This research has provided us with a new understanding of a complex set of faults in Southern California that have the potential to impact the lives of millions of people in the United States and Mexico,” Kyriakopoulos said.

Concurrently, a team from the Southern California Earthquake Center (SCEC) is working to develop an aftershock forecasting tool that could provide real-time decision-making assistance in the case of a future quake.

Within minutes of an event, they want to be able to run large-scale simulations that provide the best possible prediction of whether, and where, aftershocks may occur.

Systems like Frontera are the best hope of providing this type of advanced warning.

“When you have an earthquake, it changes the state of stress locally and regionally, which changes the probability of ruptures nearby,” said Christine Goulet, executive director for Applied Science at SCEC. “Having access to on-demand computing on Frontera, we could produce guidance on the potential of aftershocks and that would help considerably in the wake of a major earthquake.”

Chemical Weapons and COVID-19

Attacks abroad with chemical weapons such as sarin left researchers at UT San Antonio considering the almost unthinkable — could it happen here?

Research engineer Kiran Bhaganagar, with support from the U.S. Department of the Army, has developed computer models on Stampede2 to simulate the dispersal of chemical gas in a sudden attack.

Bhaganagar introduced a new protocol that deploys aerial drones and ground-based sensors to gather local wind data, cutting the time needed to predict how a dangerous plume could travel from five days to 30 minutes. She’s working to make it even faster so that officials can safely evacuate citizens under attack.

“I wouldn't have even attempted these research projects if I wasn't able to access TACC supercomputers,” Bhaganagar said. “They're absolutely necessary for developing new turbulence models that can save lives in the future.”

Bhaganagar has also extended her plume dispersal research into simulations of the spread of COVID-19 virus particles. In a first for high-fidelity numerical simulations, she’s using TACC’s Stampede2 and Maverick2 to track the infected aerosol plume under complex atmospheric conditions in New York City, the epicenter of the pandemic in the U.S. at the time, between March 9 and April 6, 2020.

Road Ahead

For two decades, TACC has been driven by challenges that were either “too big” — capability computing that focuses the maximum computation on a single problem; or “too many” — solving millions of smaller problems.

These challenges remain integral to TACC’s mission of enabling discoveries that advance science and society through the application of advanced computing technologies. In the face of a potential disaster, however, the problem is “too soon,” and science-based computing becomes urgent.

Thankfully, the technology and expertise needed to fully utilize advanced computing in a time of crisis have grown tremendously. Urgent computing might be an idea whose time has come when the time for finding answers is short.