Powering Discoveries

Aaron Dubrow


The idea was so far-fetched it seemed like science fiction: an observatory encased in a cubic kilometer of Antarctic ice to track ghostly particles called neutrinos. Benedikt Riedel, global computing manager at the IceCube Neutrino Observatory, says it makes perfect sense.

“Constructing a comparable observatory anywhere else would be astronomically expensive,” Riedel explained. “Antarctica’s ice is a great optical material and allows us to sense neutrinos like nowhere else.”

Neutrinos are neutral subatomic particles with almost no mass. They travel at nearly the speed of light and rarely interact with normal matter, so they can pass through solid material almost unimpeded. They were first detected in the 1950s in experiments near nuclear reactors and were later found to be created by cosmic rays interacting with Earth's atmosphere. Astrophysicists believe neutrinos are ubiquitous and produced by a variety of cosmic events, but they are extremely difficult to detect.

Importantly, scientists believe neutrinos could hold critical clues to understanding other cosmic phenomena. “Twenty percent of the potentially observable universe is dark to us,” Riedel explained. “That’s mostly because of distances and the age of the universe. High energy light is also hidden. It is absorbed or undergoes transformation that makes it hard to trace back to a source. IceCube reveals a slice of the universe we haven’t yet observed.”

A Full-Sky Sentinel

Most observatories can only look at a small portion of the sky. But because neutrinos pass through the Earth nearly unimpeded, IceCube can detect them arriving from any direction and therefore acts as a full-sky sentinel. For this reason, neutrinos play an important part in multi-messenger astronomy, an approach that combines observations of light, gravitational waves, and particles to understand some of the most extreme events in the universe.

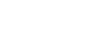

The block of ice at the Amundsen–Scott South Pole Station in Antarctica, up to 100,000 years old and extremely clear, is instrumented with sensors between 1.5 and 2.5 kilometers below the surface. As neutrinos pass through the ice, some interact with protons and neutrons, producing charged particles that emit flashes of light (photons) detected by the sensors. The sensors convert these signals, about a handful an hour, into digital data, which is then analyzed to determine whether an event came from a local source (such as the Earth's atmosphere) or a distant one.

“Based on the analysis, researchers are also able to determine where in the sky the particle came from, its energy, and sometimes, what type of neutrino — electron, muon or tau — it was,” said Jim Madsen, executive director at the Wisconsin IceCube Particle Astrophysics Center.
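How can sensor hits reveal where a particle came from? One simple approach, shown here as a sketch rather than IceCube's production method, is a least-squares "line fit": treat each hit as a point on a straight track, r_i ≈ r0 + v·t_i, and solve for the track's velocity v. The arrival direction is then opposite to v. The function names, the (x, y, z, t) hit format, the units, and the toy track are assumptions for illustration; real reconstructions also model the Cherenkov light cone and the scattering of light in the ice.

```python
import math

# Hypothetical "line fit" sketch: model each hit as lying on a straight track,
# r_i ≈ r0 + v * t_i, and solve for r0 and v by least squares.
# Assumptions: hits are (x, y, z, t) tuples in metres and nanoseconds; the fit
# ignores the Cherenkov emission angle and scattering of light in the ice.

def line_fit(hits):
    """Return (r0, v): track position at t = 0 and velocity vector (m/ns)."""
    n = len(hits)
    if n < 2:
        raise ValueError("need at least two hits")
    t_mean = sum(h[3] for h in hits) / n
    var_t = sum((h[3] - t_mean) ** 2 for h in hits) / n
    if var_t == 0:
        raise ValueError("hit times must not all be equal")
    r0, v = [], []
    for axis in range(3):  # x, y and z are fitted independently
        r_mean = sum(h[axis] for h in hits) / n
        cov_rt = sum((h[axis] - r_mean) * (h[3] - t_mean) for h in hits) / n
        v_axis = cov_rt / var_t
        v.append(v_axis)
        r0.append(r_mean - v_axis * t_mean)
    return r0, v

def arrival_direction(v):
    """Zenith and azimuth (degrees) of the direction the particle came FROM."""
    speed = math.sqrt(sum(c * c for c in v))
    # The source lies opposite to the direction of travel.
    dx, dy, dz = (-c / speed for c in v)
    dz = max(-1.0, min(1.0, dz))  # guard acos against rounding error
    zenith = math.degrees(math.acos(dz))  # 0 = from directly overhead
    azimuth = math.degrees(math.atan2(dy, dx)) % 360.0
    return zenith, azimuth

# Toy track moving downward and toward -x, one hit per nanosecond.
hits = [(-0.2 * t, 0.0, -0.2 * t, float(t)) for t in range(4)]
r0, v = line_fit(hits)
print(v, arrival_direction(v))
```

A track that moves upward through the detector gets a zenith near 180 degrees, meaning it came from below, through the Earth; that is one reason the full-sky view matters.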

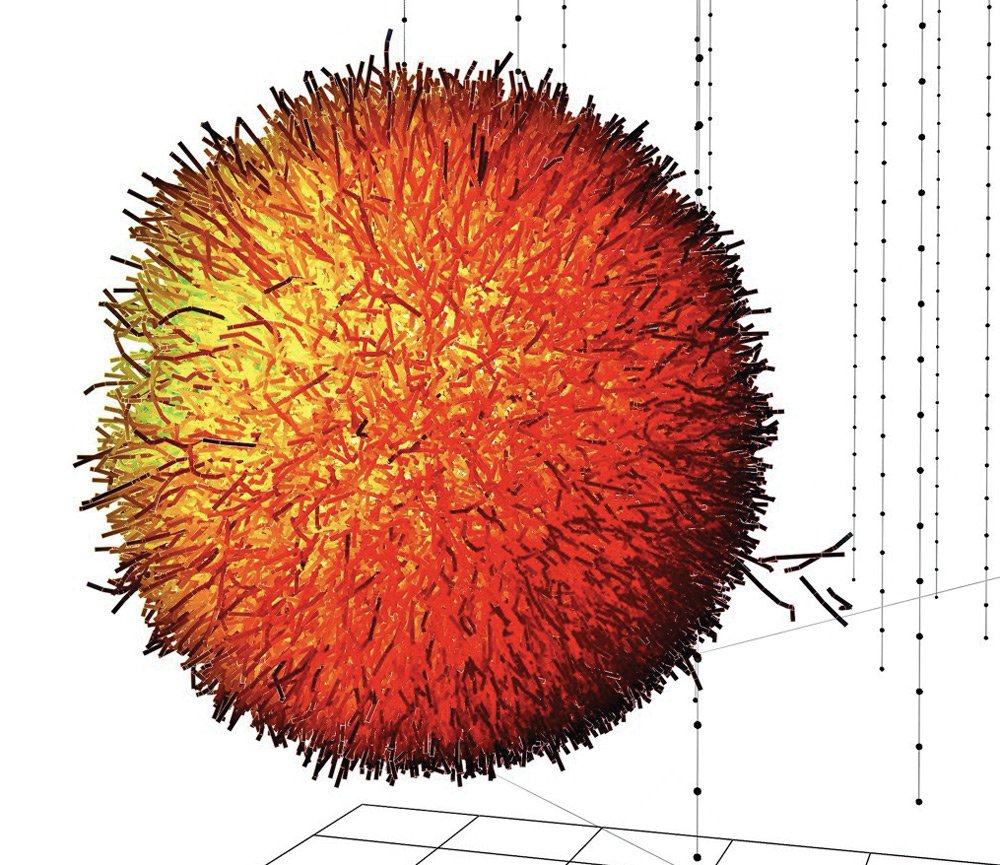

IceCube Discovers Evidence of High-energy Electron Antineutrino

On March 10, 2021, IceCube announced the first-ever detection of a Glashow resonance event, a phenomenon predicted by Nobel laureate physicist Sheldon Glashow in 1960. The Glashow resonance describes the formation of a W− boson, an elementary particle that mediates the weak force, when a high-energy electron antineutrino (the antimatter partner of the electron neutrino) interacts with an electron. Its existence is a key prediction of particle physics' Standard Model, the theory explaining how the basic building blocks of matter interact.


The signal peaked at an energy of 6.3 petaelectronvolts (6.3 quadrillion electronvolts). Only natural astrophysical phenomena can produce antineutrinos at such energies; this energy scale is out of reach of current and even planned future particle accelerators. The results, published in Nature, further demonstrated IceCube's ability to inform fundamental physics.
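Where does the 6.3 PeV figure come from? The resonance occurs when the antineutrino carries just enough energy to create a W− boson in a collision with an electron essentially at rest in the ice. A short back-of-the-envelope calculation reproduces the number; the W and electron masses below are approximate textbook values, not inputs taken from the IceCube analysis.

```python
# Back-of-the-envelope check of the Glashow resonance energy.
# An electron antineutrino of energy E hitting an electron at rest has
# centre-of-mass energy squared s = m_e**2 + 2 * m_e * E (neutrino mass neglected).
# The W- boson is produced on resonance when s = M_W**2, so
#   E_res = (M_W**2 - m_e**2) / (2 * m_e)

M_W_GEV = 80.379        # W boson mass in GeV (approximate)
M_E_GEV = 0.000510999   # electron mass in GeV (approximate)
GEV_PER_PEV = 1.0e6     # 1 PeV = 1e15 eV = 1e6 GeV

def glashow_resonance_energy_gev(m_w=M_W_GEV, m_e=M_E_GEV):
    """Antineutrino energy (GeV) at which e- + anti-nu_e -> W- is on resonance."""
    return (m_w ** 2 - m_e ** 2) / (2.0 * m_e)

e_res = glashow_resonance_energy_gev()
print(f"E_res = {e_res / GEV_PER_PEV:.2f} PeV = {e_res * 1e9:.2e} eV")
```

Because the electron is so light, the required antineutrino energy is enormous, roughly 6.3 PeV, which is why no accelerator can reach this resonance and only astrophysical sources can supply it.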

Neutrino detections require significant computing resources. Detector behavior must be modeled in preparation for observations. Background events, such as signals created when cosmic rays interact with the atmosphere, need to be filtered out. And rare, interesting signals, like needles in a haystack, need to be found and analyzed in the data.

When IceCube detects a signal, “we kick off a calculation to analyze the event that can scale to one million CPUs,” Riedel said. “We don’t have those, so Frontera can give us a portion of that computational power to run a reconstruction or extraction algorithm. We get those type of events about once a month.”
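Why can a single event's analysis scale to so many CPUs? One plausible reason, assumed here for illustration rather than taken from IceCube's code, is that a reconstruction can be organized as a scan over candidate sky directions in which each direction is scored independently, so the work splits cleanly across workers. In this minimal sketch, `toy_cost`, `TRUE_DIRECTION`, and the grid resolution are hypothetical stand-ins for a real likelihood and sky pixelization.

```python
import math
from concurrent.futures import ThreadPoolExecutor

# Hypothetical sketch of why an event reconstruction can scale out: if the work
# is a scan over candidate sky directions, each direction is scored on its own,
# so the grid can be split across as many workers as are available.
# toy_cost is a stand-in for a real likelihood; TRUE_DIRECTION is made up.

TRUE_DIRECTION = (2.0, 1.0)  # (zenith, azimuth) in radians, toy value

def angular_distance(a, b):
    """Great-circle angle (radians) between two (zenith, azimuth) directions."""
    (z1, a1), (z2, a2) = a, b
    cos_d = (math.cos(z1) * math.cos(z2)
             + math.sin(z1) * math.sin(z2) * math.cos(a1 - a2))
    return math.acos(max(-1.0, min(1.0, cos_d)))

def toy_cost(direction):
    """Toy objective: smallest at the toy true direction."""
    return angular_distance(direction, TRUE_DIRECTION) ** 2

def make_grid(n_zenith, n_azimuth):
    """Regular (zenith, azimuth) grid; a real scan might use equal-area pixels."""
    return [(math.pi * (i + 0.5) / n_zenith, 2.0 * math.pi * j / n_azimuth)
            for i in range(n_zenith) for j in range(n_azimuth)]

def scan(grid, workers=8):
    """Score every direction with a worker pool and return the best one."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        costs = list(pool.map(toy_cost, grid))
    best = min(range(len(grid)), key=costs.__getitem__)
    return grid[best], costs[best]

best_direction, best_cost = scan(make_grid(90, 180))
print(best_direction, best_cost)
```

Threads keep the sketch portable, but pure-Python CPU work is serialized by the interpreter lock; at the scale Riedel describes, the same independent pieces would instead be farmed out as separate processes or batch jobs across many nodes, which is the kind of short, wide burst a system like Frontera can absorb.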

“Large scale simulations of the IceCube facility and the data it creates allow us to rapidly and accurately determine the properties of these neutrinos, which in turn exposes the physics of the most energetic events in the universe,” said Niall Gaffney, TACC Director of Data Intensive Computing. “This is key to validating the fundamental quantum-mechanical physics in environments that cannot be practically replicated on Earth.”

Riedel serves as the coordinator for a large community of researchers (as many as 300, by his estimate) who use TACC's Frontera system, the fastest academic supercomputer in the world. In 2020, IceCube was awarded time on Frontera through the Large Scale Community Partnership track, which provides extended allocations of up to three years to support long-lived science experiments. IceCube, which has collected data for 14 years and was recently awarded a National Science Foundation grant to expand operations over the next few years, is a premier example of such an experiment.

“Part of the resources from Frontera contributed to the Glashow discovery,” Riedel said. “There’s years of Monte Carlo simulations that went into figuring out that we could do this.”

IceCube draws on computing resources from a number of sources, but Frontera is the largest system IceCube physicists use. It handles a large share of the neutrino community's computational needs, which lets researchers reserve local or cloud resources for urgent analyses, Riedel said.

Today’s astronomers can observe the universe in many different ways, and computing is central to almost all of them.

Astronomers have moved from simply looking at the sky through a telescope eyepiece to using large-scale instruments and, now, particle physics and particle observatories to make discoveries, Riedel added. “With this new paradigm, we need large amounts of computing for short periods of time to do sensitive computing, and big scientific computing centers like TACC help us do our science.”