Feature Stories

Aaron Dubrow and Faith Singer

Forecasting the Future of Climate

Climate modelers explore new approaches that give a clearer picture of Earth’s future

Today’s cutting-edge climate models are very different from those sketched out on paper almost a century ago. And future climate models could be radically different from ones today.

The models used today treat the atmosphere as a system governed by a few basic physical laws, expressed as mathematical equations. These equations describe how measurable quantities evolve: temperature, humidity, pressure, wind, rain, and flowing water. Together, these quantities characterize weather and climate, the long-term pattern of weather.

Capturing the exact interactions of every aspect of climate, down to each individual raindrop and wind gust, is impossible. Instead, climate models divide the globe into a grid of millions of separate points, each representing local conditions. The equations are solved at every grid point, and the results are passed on to neighboring points.

This process is repeated one time step after another. A simulation starts in the past, where observations let scientists check whether the model reproduces the real climate as it should, and then runs into the future, where scientists rely on the robustness of the model and on projections of human activity.
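The grid-and-time-step idea can be shown with a deliberately tiny sketch. This is not how the Community Earth System Model or any production model is written. It assumes a single ring of eight grid points, which you can think of as one circle of latitude, and a simple heat-diffusion rule: at every step, each point exchanges heat with its two neighbors. The function names `step` and `run` and the mixing coefficient `k` are hypothetical.

```python
def step(temps: list[float], k: float) -> list[float]:
    """Advance one time step on a periodic ring of grid points.

    Each point moves toward its two neighbors; `k` is a hypothetical mixing
    coefficient. This explicit scheme is stable for k <= 0.5.
    """
    n = len(temps)
    return [
        t + k * (temps[i - 1] - 2 * t + temps[(i + 1) % n])  # i - 1 wraps at i = 0
        for i, t in enumerate(temps)
    ]


def run(temps: list[float], k: float, n_steps: int) -> list[list[float]]:
    """Repeat `step` n_steps times, keeping every intermediate state."""
    history = [temps]
    for _ in range(n_steps):
        temps = step(temps, k)
        history.append(temps)
    return history


# A single warm anomaly at point 3 spreads to its neighbors over time.
initial = [0.0] * 8
initial[3] = 10.0
history = run(initial, k=0.25, n_steps=50)
```

After 50 steps, the anomaly has spread almost evenly around the ring, and the total heat is unchanged. Real models solve the full equations of fluid motion in three dimensions, but the pattern is the same: compute each point's new state from its neighbors, advance one time step, and repeat.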

Separate model components capture physical processes in the atmosphere, oceans, land, and ice sheets. Researchers couple these components to build a more complete picture of how the interconnected systems will change.

The tried-and-true way to improve the accuracy of climate models has been to increase their resolution by adding more points to the grid: in essence, zooming in on Earth.

Two leading researchers doing this work in the United States are Ping Chang from Texas A&M University and Gokhan Danabasoglu from the National Center for Atmospheric Research (NCAR).

Their simulations on TACC’s Frontera supercomputer covered Earth’s climate from 1950 to 2050. They used a newly configured high-resolution version of the Community Earth System Model, which was developed at NCAR with support from the National Science Foundation (NSF) and the Department of Energy (DOE).

The simulations were roughly 160 times more detailed than those used for official climate assessments by the Intergovernmental Panel on Climate Change. The increased resolution lets the team track how weather extremes will evolve. It also lets them investigate, for the first time at this level of detail, phenomena such as atmospheric rivers and ocean currents.


The models also required more than 100 times the computing power of previous ones.

“Large computing systems like Frontera allow us to do this,” Chang said.

Their study found that warming temperatures and more CO2 in the atmosphere lead to changes to the Atlantic Meridional Overturning Circulation, a major ocean current that transports heat and salt from the tropics to the polar regions of the Northern Hemisphere.

Christina Patricola of Iowa State University is another researcher pushing the limits of model resolution to help understand extreme weather events.

Patricola recalls that when she was a graduate student at Cornell University, working with leading climate scientist Kerry Cook, her first models had a horizontal resolution of 90 kilometers (km) and were considered state-of-the-art at the time. Her recent models have a resolution of 3 km, a grid spacing 30 times finer.

“We’re interested in extreme precipitation totals and hourly rainfall rates,” she said. “We had to go to a high resolution of 3 km to make these predictions. And as we increase resolution, the computational expense goes up.”
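Why does going from 90 km to 3 km cost so much more? Below is a rough back-of-the-envelope sketch. It rests on two assumptions: cost grows with the number of horizontal grid cells, and the time step must shrink in proportion to the grid spacing, a common stability constraint for explicit numerical schemes. The function name and both assumptions are illustrative; they are not figures from Patricola’s study.

```python
def refinement_cost(coarse_km: float, fine_km: float,
                    horizontal_dims: int = 2,
                    time_step_scales: bool = True) -> tuple[float, float, float]:
    """Rough cost multiplier for refining a model's horizontal grid.

    Assumes cost is proportional to (number of grid cells) x (number of time
    steps) and ignores vertical levels, I/O, and parallel-scaling overheads.
    """
    ratio = coarse_km / fine_km                 # how much finer the spacing is
    cells = ratio ** horizontal_dims            # more cells in each horizontal direction
    steps = ratio if time_step_scales else 1.0  # finer spacing -> shorter time step
    return ratio, cells, cells * steps


print(refinement_cost(90, 3))  # (30.0, 900.0, 27000.0)
```

Under these assumptions, spacing 30 times finer means about 900 times more grid cells and on the order of 27,000 times more computation. Actual costs depend on the model, the number of vertical levels, and the hardware.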

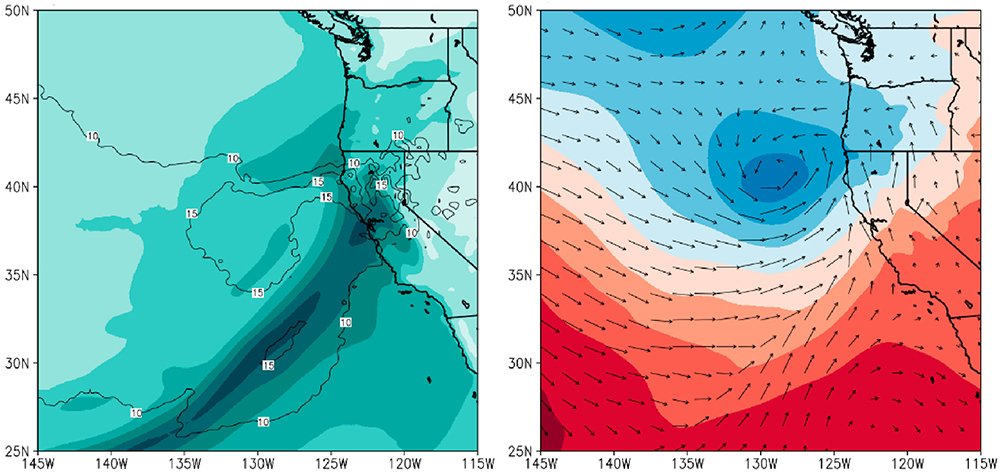

Patricola and her team used TACC’s Stampede2 supercomputer to simulate five of the most powerful storms to hit the San Francisco Bay Area in the past two decades, to see how they would change in a warmer climate. They found that under future conditions, some of these extreme events would deliver almost 40% more rain. That is more than would be expected simply because warmer air can hold more water vapor.

Patricola calls these “storyline” experiments: simulations meant to show how historically impactful storms could look in a warmer world.

Focusing on events known to have disrupted city operations gives context for understanding the potential impacts of similar events under future climate conditions. The research, funded by the City and County of San Francisco, will help the region plan its future infrastructure with mitigation and sustainability in mind.

“Having this level of detail is a game changer,” said Dennis Herrera, general manager of the San Francisco Public Utilities Commission, the lead City agency on the study. “This groundbreaking data will help us develop tools to allow our port, airport, utilities, and the city as a whole to adapt to our changing climate and increasingly extreme storms.”

Filling Gaps in the Climate Equation

Important information is starting to emerge on how climate change affects clouds and permafrost thaw. This information had been out of reach because the data involved are so unwieldy.

Permafrost is ground that remains frozen for two or more consecutive years. It underlies about 15% of the Northern Hemisphere’s exposed land. It stores large amounts of organic carbon in frozen biomass, so it acts as a carbon sink. As it thaws, that carbon can be released as carbon dioxide and methane.

Over the past two decades, commercial satellites have mapped much of the Arctic at very high resolution. But the data volume is so large that traditional analysis and machine learning techniques have failed to handle it.

"We brought in AI-based deep learning methods to process and analyze the large amount of Arctic data," said Chandi Witharana, assistant professor of Natural Resources and the Environment at the University of Connecticut.

Witharana and his team first had to hand-annotate the outlines of 50,000 ice-wedge polygons, a distinctive feature of permafrost landscapes, and classify each as either low-centered or high-centered. They used these annotations to train an AI system that identifies the polygons automatically with more than 80% accuracy.

They then turned to TACC’s Longhorn supercomputer, a system that can perform AI inference tasks rapidly, to analyze the data. By the end of 2021, the team had identified and mapped 1.2 billion ice-wedge polygons in the satellite data. Beyond classifying the polygons, the method also derives the size of the ice wedges and of their troughs.

"Permafrost isn't characterized at these spatial scales in climate models," said Arctic researcher Anna Liljedahl of the Woodwell Climate Research Center. "This study will help us derive a baseline and see how changes are occurring over time."

Clouds, on the other hand, are a wildcard in future climate scenarios. Scientists are unsure whether future cloud changes will help cool or warm the planet.


“Low clouds could dry up and shrink like the ice sheets,” said Michael Pritchard, professor of Earth System science at the University of California, Irvine. “Or, they could thicken and become more reflective.” These two scenarios would lead to very different future climates.

The problem is that clouds form on length and time scales far smaller than today’s climate models can resolve. As a result, models represent clouds only through approximations.

Pritchard is working to close this gap by splitting the climate modeling problem into two parts. The first is a coarse, lower-resolution (100 km) planetary model. The second is many small patches with 100- to 200-meter resolution. The two sets of simulations run independently and then exchange data every 30 minutes, so that neither drifts off track or becomes unrealistic.

“The model has thousands of little micromodels that capture things like realistic shallow cloud formations that only emerge in very high resolution,” Pritchard explained.
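The coupling Pritchard describes resembles a technique often called superparameterization, or a multiscale modeling framework. The toy Python sketch below makes several assumptions: one scalar moisture value per coarse column, a hypothetical `MicroModel` with four sub-cells per column, and a fixed pattern of small-scale variability. None of these names or numbers come from Pritchard’s code. The sketch illustrates one point: a coarse column can be too dry on average to form cloud while some of its fine-scale sub-cells still cross the cloud threshold.

```python
from dataclasses import dataclass


@dataclass
class MicroModel:
    """Hypothetical fine-scale patch embedded in one coarse grid column."""
    cells: list[float]    # e.g. moisture in each small sub-cell
    offsets: list[float]  # assumed fixed small-scale variability (sums to zero)

    def run(self, forcing: float, n_substeps: int, rate: float = 0.1) -> None:
        # Fine-scale time steps: each sub-cell relaxes toward the large-scale
        # state plus its own local perturbation.
        for _ in range(n_substeps):
            self.cells = [c + rate * (forcing + o - c)
                          for c, o in zip(self.cells, self.offsets)]

    def mean(self) -> float:
        return sum(self.cells) / len(self.cells)

    def cloud_fraction(self, threshold: float) -> float:
        # Share of sub-cells moist enough to form cloud.
        return sum(c > threshold for c in self.cells) / len(self.cells)


def coarse_step(columns: list[float], k: float = 0.1) -> list[float]:
    # Coarse model: neighboring columns on a periodic ring mix with each other.
    n = len(columns)
    return [c + k * (columns[i - 1] - 2 * c + columns[(i + 1) % n])
            for i, c in enumerate(columns)]


def coupled_run(columns, micromodels, n_exchanges, substeps_per_exchange,
                threshold):
    clouds = [0.0] * len(columns)
    for _ in range(n_exchanges):
        columns = coarse_step(columns)
        # Each micromodel runs independently, driven by its own column.
        for col, mm in zip(columns, micromodels):
            mm.run(col, substeps_per_exchange)
        # Exchange: the coarse grid takes back the fine-scale averages and the
        # cloud statistics that only the fine grid can resolve.
        columns = [mm.mean() for mm in micromodels]
        clouds = [mm.cloud_fraction(threshold) for mm in micromodels]
    return columns, clouds


offsets = [-0.2, -0.1, 0.1, 0.2]
micros = [MicroModel([0.95] * 4, offsets) for _ in range(4)]
cols, clouds = coupled_run([0.95] * 4, micros, n_exchanges=5,
                           substeps_per_exchange=10, threshold=1.0)
```

Each coarse column stays near 0.95, below the assumed cloud threshold of 1.0, so the coarse model alone would report no cloud. The micromodels, however, report half of each column as cloudy, because their moister sub-cells cross the threshold.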

Simulating the atmosphere in this way on Frontera provides Pritchard the resolution he needs to capture the physical processes and turbulent eddies involved in cloud formation.

“If those clouds shrink away, like ice sheets will, exposing heat-absorbing darker surfaces, that will amplify global warming and all the hazards that come with it,” Pritchard said. “But if they do the opposite of ice sheets and thicken up to reflect more sunlight, which they could, that is less hazardous. Some have estimated cloud changes as a multi-trillion-dollar issue for society.”

Alternative Computational Approaches

Could AI, in the form of machine learning and deep learning, provide insights that improvements in model resolution alone cannot?

In a recent paper, Pritchard and lead author Tom Beucler of UC Irvine describe a machine learning approach that successfully predicts atmospheric conditions even in climate regimes it was not trained on, a task at which other approaches have struggled.

This ‘climate invariant’ model incorporates the equations of climate processes into machine learning algorithms. Their study — which used Stampede2, Cheyenne at the National Center for Atmospheric Research, and Expanse at the San Diego Supercomputer Center — showed that machine learning methods can maintain high accuracy across a wide range of climates and geographies.

“I’m interested in seeing how reproducibly and reliably the machine learning approach can succeed in complex settings,” Pritchard said. “If machine learning high-resolution cloud physics ever succeeded, it would transform everything about how we do climate simulations.”

Cascading Climate Impacts and Fixes

A new project led by Ping Chang and Gokhan Danabasoglu will use Frontera to power the models they developed to investigate how changes to the climate and oceans will impact fisheries in the U.S. and around the world.

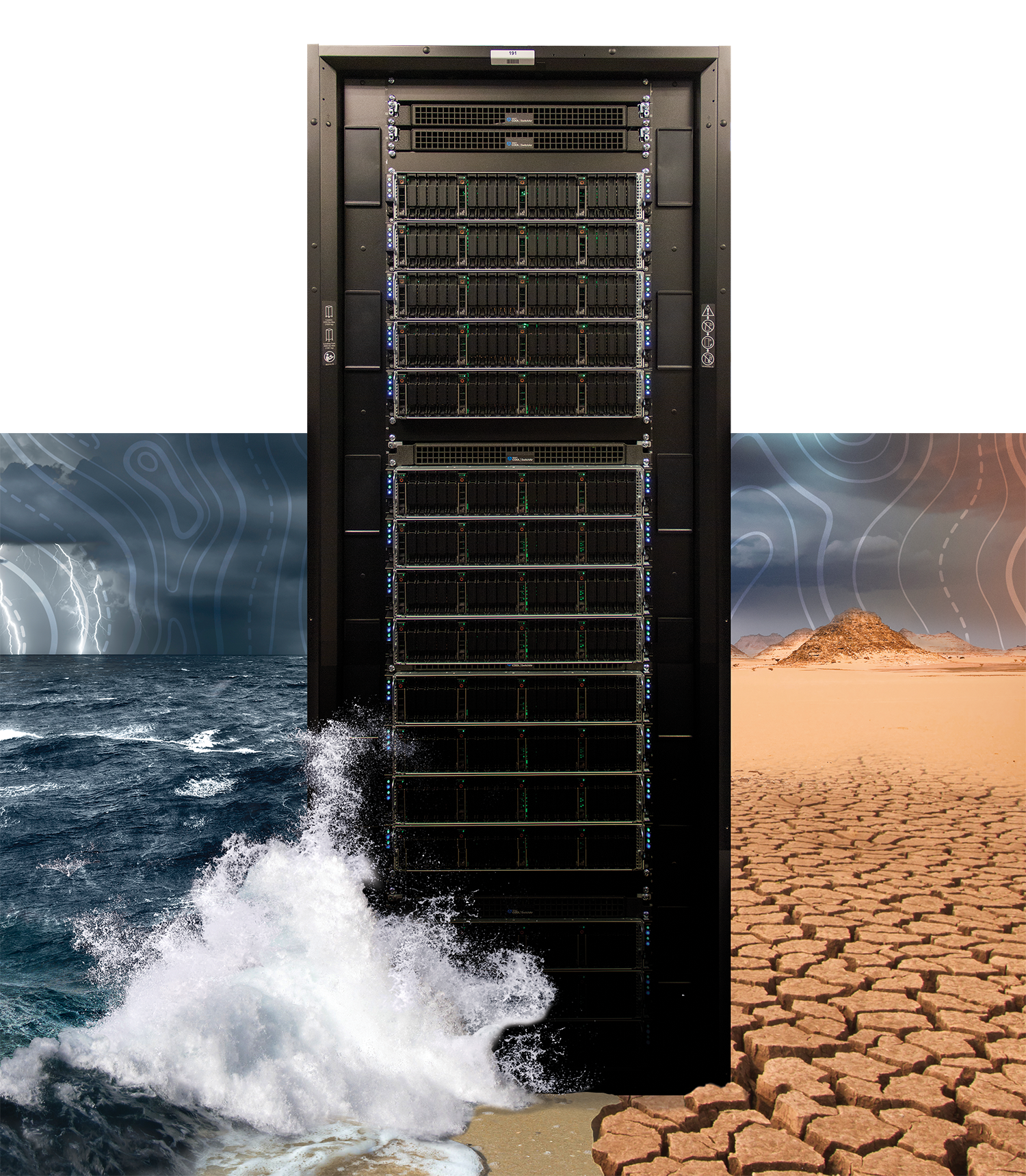

A handful of highly productive fisheries feed a billion people worldwide. They lie along the eastern edges of the world’s oceans, off the West Coast of the U.S., the Canary Islands, Peru, Chile, and Angola. At these locations, a process called upwelling brings cold, nutrient-rich water to the surface. Those nutrients fuel the food web that supports the fish and other sea creatures humans depend on for sustenance.

“High-resolution models so far are predicting warmer, not colder, temperatures in these waters,” Chang said. “In Chile and Peru, the warming is quite significant: 2-3°C in the worst-case scenario, which represents continuing levels of greenhouse gas emissions.” This is bad news for upwelling, because warmer waters increase stratification and keep nutrients from reaching the surface.

Their simulations are among the first to incorporate ocean biogeochemical and fisheries models into an Earth system model framework at 10 km resolution. This requires even more computing horsepower. Fortunately, Chang and his colleagues were awarded a large allocation of time on Frontera to support the project.

“TACC is really unique in providing resources for researchers like us to tackle the fundamental questions of science,” Chang said. “Without Frontera, I do not know if we could make simulations like we do. It’s critical.”

Educating Future Climate Modelers

One way scientists are meeting the challenges of climate change is by expanding educational opportunities at the nexus of high-performance computing and climate science.

In spring 2022, Patricola taught her first weather and climate modeling course at Iowa State University, using TACC supercomputers to power the students’ models. Students simulated tornadic storms and blizzards in the Midwest; the first recorded rainfall at the summit of the Greenland ice sheet; and Hurricane Maria, which intensified rapidly before hitting Puerto Rico.

The class is designed for students interested in a variety of career paths, including weather prediction and broadcasting, private industry, and scientific research on climate change.

“I’m torn between genuine gratitude for the U.S. national computing infrastructure, which is so incredible at helping us develop and run climate models,” Pritchard said, “and feeling that we need a Manhattan Project level of new federal funding and interagency coordination to actually solve this problem.”

Simulation by simulation, climate scientists are deepening our understanding of the Earth, predicting the changes we will face, and partnering on solutions to address our climate future.