Powering Discoveries

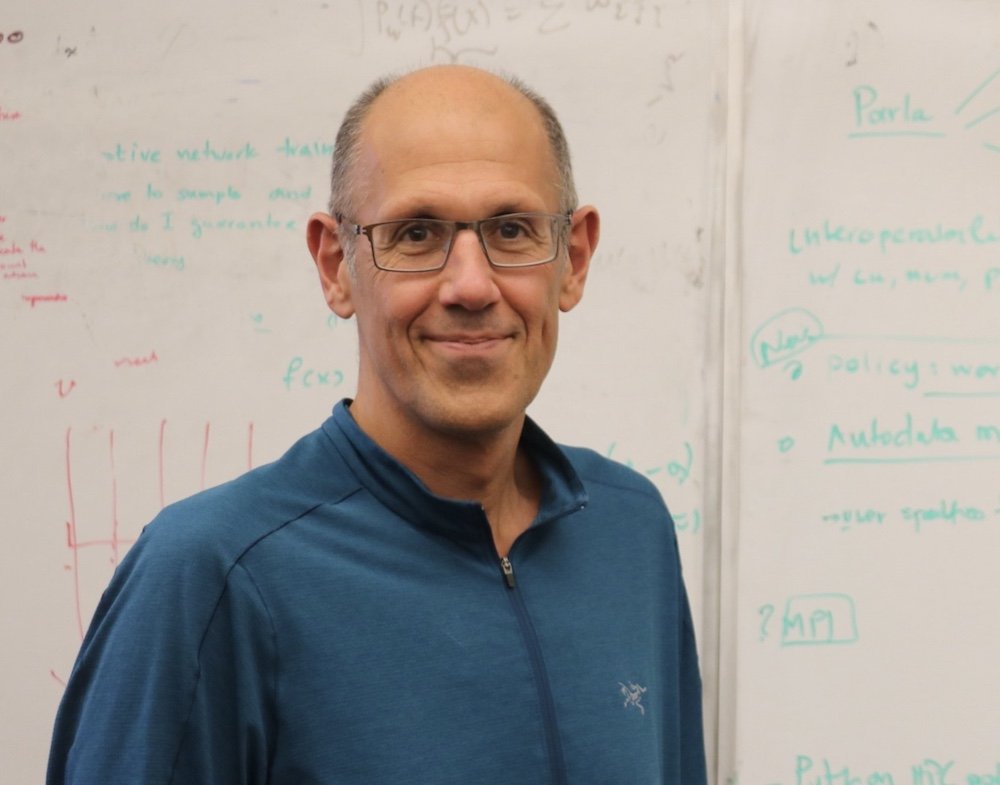

John Holden

Exascale Power

Scientist, HPC leader George Biros looks forward to new era of exascale computing

Many computational scientists begin as mathematicians. Theirs is a community that believes our salvation lies in the universal language of numbers.

Societal problems, particularly those where we must predict future behaviors or outcomes, are best solved using applied mathematical tools — notably a tool known as a partial differential equation (PDE). Such problems include everything from designing new spacecraft to developing novel materials to determining what the climate will look like 500 years from now.

George Biros is one such computational scientist. He holds the W.A. “Tex” Moncrief Chair in Simulation-Based Engineering Sciences at the Oden Institute for Computational Engineering and Sciences at UT Austin.

“The work in our group focuses on physical systems,” Biros said. “PDEs are the mathematical foundation for the majority of physical models. From plasma physics to tumor growth, and from blood flow in capillaries to alloy solidification, PDEs enable predictive simulations.”

His research touches on interdisciplinary applications in healthcare, defense, fluid dynamics, and additive manufacturing. He also gets under the hood to advance the fundamental mathematical tools underpinning computational science and engineering and high performance computing (HPC).

Biros is excited about the new age of exascale supercomputing, which the HPC community recently entered with the unveiling of the Frontier system at Oak Ridge National Laboratory. Frontier is capable of more than one exaflop: a billion billion (10^18) calculations per second.

“Exascale computing will dramatically accelerate science discoveries and engineering innovation,” Biros said. “It will enable high resolution simulations that are currently intractable; ensemble simulations for uncertainty quantification; and integration of streaming data, machine learning algorithms, and physics-based simulations in an unprecedented scale.”

In addition to being a top scientist in his field, Biros is a much-revered computational expert who has been using supercomputers at TACC to enable discoveries for a decade.

“TACC is of strategic importance for Texas and the nation,” Biros said. “The exposure to innovation in HPC motivates us all to push the envelope in leadership-scale computation.”

It so happens that supercomputers are very good at solving PDEs — a Biros specialty. Although computational models can be realized using alternative mathematical and scientific tools, PDEs are used ubiquitously for modeling physical, biological, and chemical systems.

The increasing power, performance, and storage capacity of HPC facilities like TACC, coupled with more sophisticated modeling techniques, mean that advanced computing is now accurate enough to have moved into almost every area of science imaginable.

Higher Performance Computing

Biros doesn’t just exploit the formidable power of TACC’s systems to find solutions to real-world challenges where numbers hold the answers. He’s also finding new ways to improve efficiencies and scale performance and capacity to the next level: exascale computing.

Akin to an interior architect who redesigns and retrofits existing structures to enable greater efficiencies, Biros and his team are working to improve existing algorithms by providing the mathematical upgrades required to enable their application at much larger scales.

What are Partial Differential Equations?

Partial differential equations (PDEs) are essential in fields like physics and engineering because they supply useful mathematical descriptions of fundamental behaviors in a variety of systems, from heat flow to quantum mechanics.
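To make the idea concrete, here is a minimal sketch of how a computer might approximate one of the simplest PDEs, the one-dimensional heat equation u_t = alpha * u_xx, on a rod whose ends are held at zero temperature. It is an illustration only and is not drawn from Biros's research. The function name `solve_heat_1d`, its parameters, and the choice of an explicit finite-difference scheme are all assumptions made for this example.

```python
import math


def solve_heat_1d(n_points=51, alpha=1.0, t_end=0.1):
    """Approximate u_t = alpha * u_xx on [0, 1] with u = 0 at both ends.

    Hypothetical helper for illustration: explicit (forward-time,
    centered-space) finite differences, starting from u(x, 0) = sin(pi * x).
    """
    dx = 1.0 / (n_points - 1)
    # The explicit scheme is only stable when dt <= dx^2 / (2 * alpha),
    # so pick a step safely below that limit.
    dt_max = 0.4 * dx * dx / alpha
    n_steps = math.ceil(t_end / dt_max)
    dt = t_end / n_steps
    r = alpha * dt / (dx * dx)

    xs = [i * dx for i in range(n_points)]
    u = [math.sin(math.pi * x) for x in xs]
    u[0] = u[-1] = 0.0  # fixed-temperature boundary conditions

    for _ in range(n_steps):
        new_u = u[:]
        # Each interior point relaxes toward the average of its neighbors.
        for i in range(1, n_points - 1):
            new_u[i] = u[i] + r * (u[i - 1] - 2.0 * u[i] + u[i + 1])
        u = new_u
    return xs, u


if __name__ == "__main__":
    xs, u = solve_heat_1d()
    mid = len(u) // 2
    exact = math.exp(-math.pi ** 2 * 0.1)  # known solution at x = 0.5, t = 0.1
    print(f"numerical: {u[mid]:.5f}  exact: {exact:.5f}")
```

For this initial condition the exact solution is known, so the numerical answer can be checked against it. Production simulations involve three dimensions, irregular geometries, and billions of unknowns, which is where supercomputers and more sophisticated algorithms come in.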

“People like George, who run several kinds of problems on our machines and publish a lot of important papers in the field of HPC, do a lot of benchmarking as they compare the performance of their algorithms on as many resources as they can,” said Bill Barth, director of Future Technologies at TACC.

As we move into the exascale era, challenges remain.

“The gap between memory bandwidth and computation continues to widen, making many existing algorithms prohibitively expensive,” Biros said. “The new architectures require extremely careful tuning and optimization.”

Rapid prototyping will become quite challenging at scale, too, according to Biros. Exascale applications require much tighter integration of massive datasets, data analysis, and simulation software. That integration, in turn, calls for a whole range of new algorithms for inverse problems, optimal control, data assimilation, Bayesian inference, and decisions under uncertainty.

But the two-time winner of the Gordon Bell Prize, the most prestigious award in the field of HPC, isn’t fazed by these challenges one iota.

“We’re currently aiming for the exascale benchmark and we’ll reach it,” Biros concluded. “This is a relentless quest that can never be satisfied. Whatever is the next biggest, that’s what we aim for.”