Feature Stories

Jorge Salazar

Stampede: A Legacy of Open Science

NSF flagship systems move toward 19 years of large-scale computing impact

In 2011, TACC won a grant from the NSF to acquire its new flagship supercomputer dubbed “Stampede.” True to its name, the rush to build Stampede meant that in a few short months TACC’s back parking lot would transform into an 11,000-square-foot datacenter to house the new system.

Concrete would go down in February, with the raised floor and roof up by March, and power transformers in by May. Lots of power. About nine megawatts of capacity, equivalent to what 9,000 households use. Power usage would be regulated in part by a newly constructed water chilling tower. The icy water flow would help cool down the heat generated by Stampede’s circuits, up to 75 percent more efficiently than air cooling a data center.

“We completed the build-out in about nine months, which was record-setting speed for on-campus construction,” said Dan Stanzione, the executive director of TACC and principal investigator of the proposal.

Rooted in Ranger

Stanzione helped put TACC on the supercomputing map with Ranger, the first of the NSF Track-2 systems, a series of $30 million-class computers and shared resources for researchers across the country. Ranger marked the start of the long run up to what would become Stampede3.

Ranger’s deployment in 2008 marked the beginning of the Petascale Era in HPC, where supercomputers would approach a petaFLOPS, a thousand million million floating point operations per second. Ranger would also serve as the largest HPC resource of the NSF TeraGrid (later XSEDE and now ACCESS), a nationwide network of academic HPC centers that provide scientists and researchers shared large-scale computing power and expertise.

"The most significant thing about Ranger was that TACC had the strongest culture of highly competent service to the research community," remarked Daniel Atkins, a professor emeritus of Information at the University of Michigan, Ann Arbor. Atkins was the NSF’s inaugural director of the Office of Cyberinfrastructure at the time of the dedication ceremony for Ranger.

After a successful five-year run as the world’s largest system for open science research, Ranger retired in 2013. It was time to start fresh with new technology that delivered more FLOPS per watt.

“I witnessed the planning, building, and deployment of Stampede,” said former NSF Program Officer Irene Qualters, who is currently with Los Alamos National Laboratory. “You could see through the process that TACC was very ambitious. They were passionate. The team worked very hard. They weren't afraid to be different, but they really had integrity. They were committed to doing things for the right reason, and in the right way, but maybe not the orthodox way.”

NSF Investment in Stampede

TACC split the NSF investment in the original Stampede between proven and more experimental technologies. Intel Xeon E5 (Sandy Bridge) processors powered the base system, augmented by Intel’s Xeon Phi (Knights Corner) processors, the first large-scale deployment of the Many Integrated Core (MIC) product line. The Xeon Phi had 61 cores versus the 16 cores per dual socket server of the E5.

“It seemed like an enormous number of cores at the time,” Stanzione said. “But it’s less than what’s on a typical socket today.” The Lonestar6 system, for example, has 128 cores per node.

Stampede’s base system was four times more capable than Ranger, even without considering the capability of the MIC.

"They were committed to doing things for the right reason, and in the right way, but maybe not the orthodox way."

“I think for many of the researchers that did use the MIC, in a way it future proofed their codes for what's happened in the broad Xeon line today,” Stanzione said. Ultimately, the base Xeon line caught up with the more experimental MICs in core count.

“All of the principles of coding — threading, figuring out how many cores you use for a MPI task, how many you set on a node — we learned from Stampede and Stampede2,” Stanzione said. “It was a struggle to get everybody to use MICs at the time, but it was the direction things were going and we continued to push people to look at what future directions might look like without taking away that base capability to do science.”

Stampede’s 10 petaFLOPS capability launched it into the #7 spot of the fastest supercomputers on the November 2012 Top500 list. More than 12,000 users participated in 3,600 projects over Stampede’s life with tens of thousands more users on portals through the NSF’s CyVerse project, DesignSafe initiative, and others.

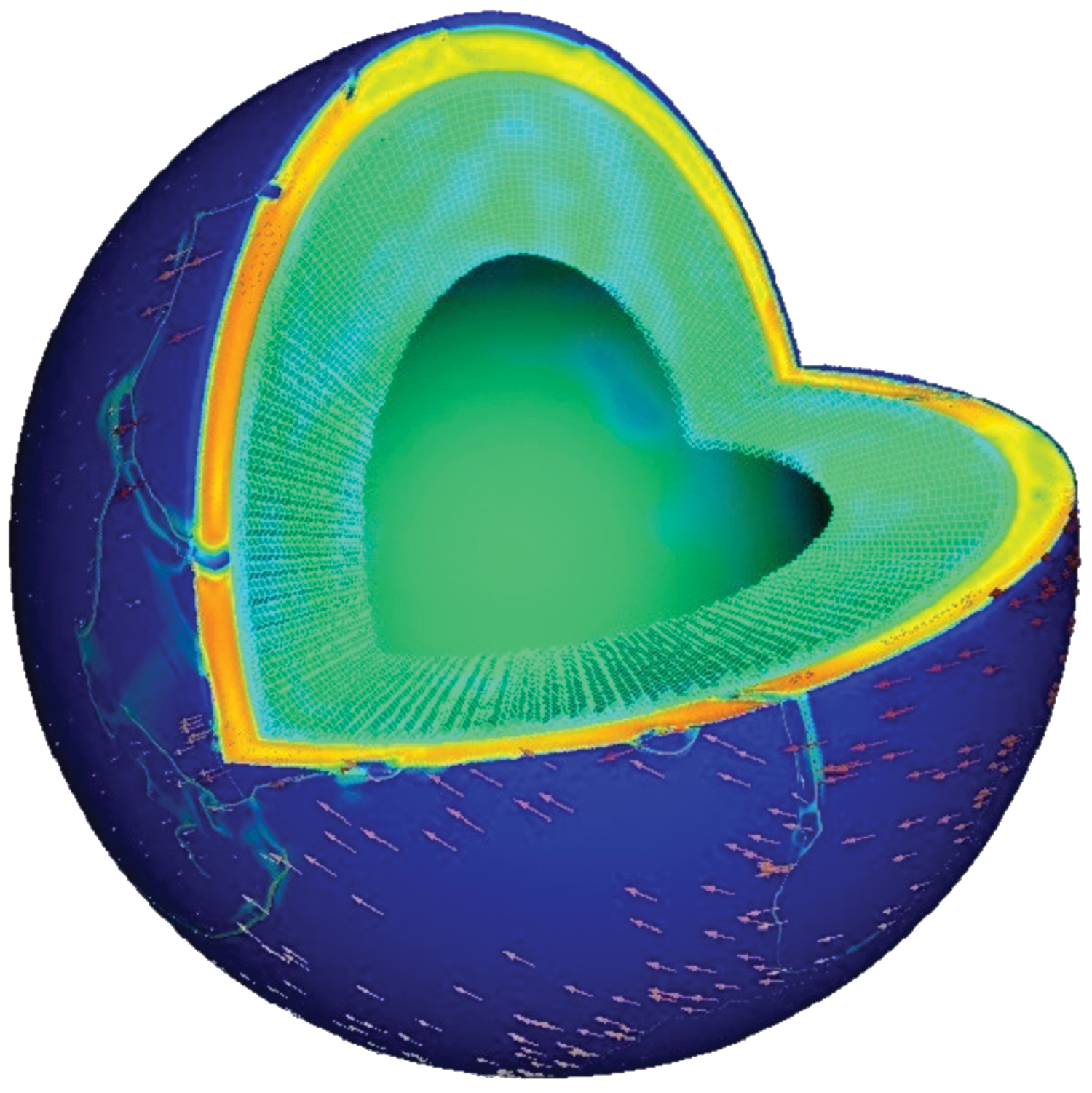

The original system contributed to thousands of scientific innovations including the NSF-funded Laser Interferometer Gravitational-Wave Observatory (LIGO) measurement of gravitational waves; determining how the first stars in the universe formed; and contributing to the Gordon Bell prize-winning simulations led by Omar Ghattas of UT Austin’s Oden Institute in 2015 providing insights into the driving mechanisms of plate tectonics.

Road to Stampede 1.5

The original Intel Knights Corner MIC cards on Stampede paved the way for an upgrade in 2016 to the second generation of MIC technology called Knights Landing (KNL). But unlike Knights Corner, which sat in a slot as a separate PCI card, the KNL was a self-hosted board. That meant that instead of replacing some of the cards for the upgrade, TACC needed to acquire new computers, RAM, network — new everything.

“We used 500 nodes of KNL as a preview for what we were going to do with Stampede2, where we split one part into 4,200 nodes with Knights Landing processors. Intel Skylake processors would fill out the remaining 1,736 nodes on Stampede2,” Stanzione said.

The Skylake cores would push Stampede2’s per socket core count on its base system to 28 with 68 cores being usable on the KNL. This ended up being a 2-to-1 difference in core count from Stampede to Stampede2, versus the 4-to-1 difference from Ranger to Stampede.

“They were already starting to converge,” said Stanzione, emphasizing that because they were both in the same generation of silicon process, two Skylake sockets kept up with about one KNL node. The Skylakes became more popular because researchers could do less coding work to extract performance from them.

What the users saw less of is that Skylake was a dual socket server versus a one socket KNL server. Skylakes needed more power to get the same performance as KNL. More power was a tradeoff for user convenience.

“A big part of future tradeoffs was figuring out how much clock rate we were willing to trade for more concurrency to use all the transistors that could be put on a chip. And how big of a chip did we want to get performance,” Stanzione said.

TACC Wins Stampede2

In 2016, TACC won the NSF award for Stampede2. The Intel Skylake and KNL nodes provided a combined capacity of 20 petaFLOPS, which was 10 times faster than Stampede. Stampede2 went into production in 2017 with a debut spot of #12 on the Top500 list.

“Most users saw a lot more capacity when Stampede2 went online, which I think explained its popularity over the six years we ran it,” Stanzione said. Over 12 million jobs ran on Stampede2, serving more than 10,000 individual researchers as the scientific computing workhorse for NSF’s XSEDE program.

“It was super power efficient in ways that even our current systems aren't anymore,” Stanzione said. By getting the bulk of users to transition their codes to the KNL processors, precious power was saved. Stampede2 was popular with users and consistently over-requested through its time in service.

In terms of impactful science, Stampede2 helped Event Horizon Telescope researchers form the first images of a black hole, which made international headlines. Simulations were used to lay the groundwork for the black hole imaging by helping to determine the theoretical underpinnings to interpret the black hole mass, its underlying structure, and orientations of the black hole and its environment.

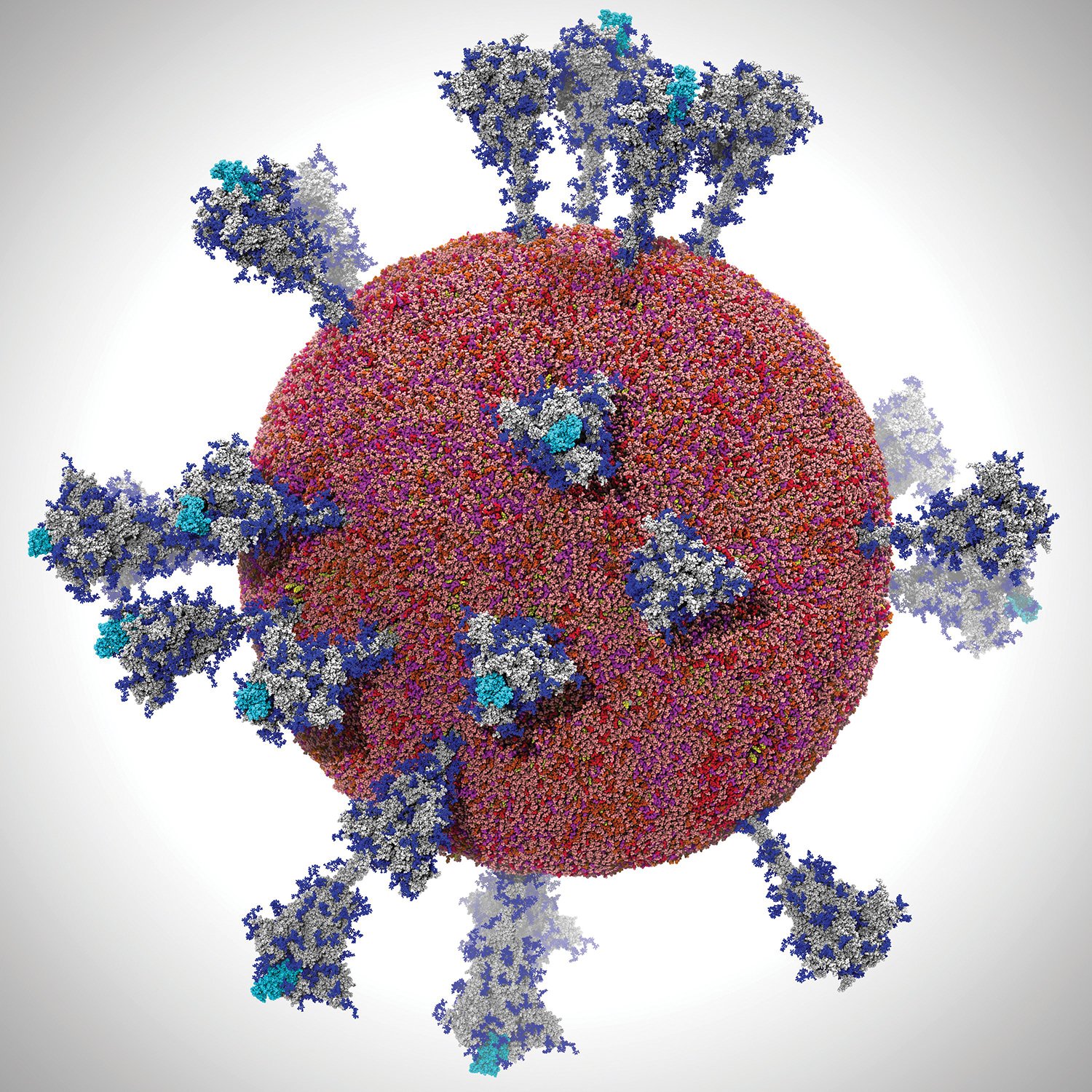

What’s more, in June 2023 the NSF-funded North American Nanohertz Observatory for Gravitational Waves (NANOGrav) Physics Frontiers Center announced they had used Stampede2 to find evidence for gravitational waves that oscillate with periods of years to decades.

"The NSF NANOGrav team created, in essence, a galaxy-wide detector revealing the gravitational waves that permeate our universe," said NSF Director Sethuraman Panchanathan. "The collaboration involving research institutions across the U.S. shows that world-class scientific innovation can, should, and does reach every part of our nation.”

Urgent Computing

When disaster struck, Stampede2 helped deliver critical information quickly to scientists and made a real impact to people in harm’s way. Urgent computing — generating reliable answers with supercomputers when time is running out — was a priority in Stampede2’s workload.

Clint Dawson, chair of the Aerospace Engineering and Engineering Mechanics department at UT Austin, used Stampede2 in storm surge models that predicted days in advance several feet of water inundating Lake Charles from Hurricane Laura in 2020. First responders used these simulations to determine what roads were safe to reach impacted areas; how high the surge was going to be in that area; and what areas needed to be evacuated.

Stampede2 also provided daily, real-time storm simulations to the NOAA National Severe Storms Laboratory, which helped scientists generate diagnostic or prognostic models based on real-world storm data.

During the height of the COVID-19 pandemic, TACC devoted more than 30 percent of its computational cycles — much of it on Stampede2 — to COVID-19 related research by the White House-led COVID-19 HPC Consortium.

“Stampede2 and the whole line of Stampede systems have been remarkably good investments,” Stanzione said. “It was an effective use of taxpayer funds in terms of getting all kinds of science done that led to all kinds of innovations that both now and in decades to come will likely have long term benefits to society.”

Stampede2 reached the end of its lifespan in November 2023.

New Era—Stampede3

A new system will continue Stampede’s legacy of open science. Stampede3 enters full production in early 2024 and is expected to serve the open science community through 2029. It will add four petaFLOPS of capability to the open science community through the NSF ACCESS initiative.

The context for NSF-funded supercomputing centers has changed — the 2020s mark an era of more, smaller, and faster systems compared to systems that served as workhorses for the majority of NSF-allocated scientific computation.

“It gives us an opportunity to leverage some of those investments,” Stanzione said.

Stampede3 will repurpose Stampede2's Intel Skylake nodes and newer Icelake nodes from a 2022 upgrade. They will continue to handle smaller, throughput computing jobs. “We’re focusing the big parallel jobs on Stampede3’s new hardware,” he added, which includes 560 Intel Sapphire Rapids processors with high bandwidth memory (HBM)-enabled nodes, adding nearly 63,000 cores to its capability.

TACC is also moving away from the DIMM structure in which there are external memory modules outside the chip, and the system has to go across the pins and the motherboard to access memory. Instead, the HBM-enabled node has memory dies that are stacked directly on the chip and inside the processor package. The technology was pioneered for GPU workloads and is now used with general processors starting with this generation of the Intel Xeon processor.

Stampede3 will also provide needed CPU computing resources and open avenues of access for emerging communities.

“When we add the HBM for the same code running on the same processor, we expect some of them to double in performance,” Stanzione added. “We're excited about that.”

Other Stampede3 features include a new GPU subsystem, which adds 40 new Intel Data Center Ponte Vecchio processors for AI/ML and other GPU-friendly applications. Plus, a new Omni-Path Fabric 400 gigabit/second networking technology networking, effectively quadrupling network bandwidth.

“The Stampede3 system maintains, builds upon, and significantly extends the record of accomplishments demonstrated in the Stampede portfolio since 2013, including to provide for uninterrupted, continuing open science investments in critical applications today by thousands of researchers, educators, and students nationwide,” said Robert Chadduck, the program director for NSF’s Office of Advanced Cyberinfrastructure. “Stampede3 will also provide needed CPU computing resources and open avenues of access for emerging communities to apply the power of advanced computing in new domains.”

With the addition of Stampede3, these systems will have pushed forward the leading edge of the nation’s large-scale scientific computing for nearly two decades. The research and discoveries enabled by Stampede have sparked innovations and helped maintain national security and economic competitiveness. Its legacy will continue well into the future.