Feature Stories

Jorge Salazar

AI at Scale

Science reaches for new heights with TACC's game-changing systems

Artificial Intelligence in 2024 needs no introduction.

During the past decade, AI has done what it could not do before—digest and understand natural human language, solve math problems, and identify objects in images.

Decades of advances in HPC have produced the hardware and software innovations that laid the foundation for the state-of-the-art AI systems of today.

“The release of ChatGPT was the Sputnik moment of the development of AI,” said TACC Executive Director Dan Stanzione.

Attention, Language, and Technology

The turning point came in 2017, when Google researchers published “Attention Is All You Need.” The paper proposed the Transformer network architecture, which large language models (LLMs) use to evaluate the relevance and context of each word to other words in a sentence. Because the Transformer dispenses with the step-by-step recurrence of earlier neural networks, it can process the words of a sentence in parallel, which makes the models easier to scale up.
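To make the idea of weighing each word against every other word concrete, here is a minimal sketch of scaled dot-product attention in plain Python. It is an illustration under stated assumptions, not code from any production model: the three-word sentence and its two-dimensional vectors are invented for the example, and the raw word vectors stand in for the learned query, key, and value projections that a trained Transformer would use.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    scale = math.sqrt(len(keys[0]))
    outputs, all_weights = [], []
    for q in queries:
        # Relevance of every word (key) to the current word (query).
        weights = softmax([dot(q, k) / scale for k in keys])
        # Blend the value vectors according to those relevance weights.
        mixed = [sum(w * v[i] for w, v in zip(weights, values))
                 for i in range(len(values[0]))]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical embeddings for a three-word sentence (assumption for illustration).
words = ["science", "needs", "compute"]
vectors = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# Simplification: the same vectors serve as queries, keys, and values.
outputs, weights = attention(vectors, vectors, vectors)
for word, row in zip(words, weights):
    print(word, [round(w, 3) for w in row])
```

Each printed row shows how strongly one word attends to every word in the sentence. No row depends on the result of another, which is why this computation spreads so readily across many processors.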

Companies like Google and Meta need thousands of processors running in parallel to train an LLM, a feat enabled in large part by HPC advances in fast network technologies such as InfiniBand. TACC’s Frontera, the fastest supercomputer in U.S. academia, uses InfiniBand to route messages between its computer servers and data storage.

The state-of-the-art in AI also relies on the software standards that have grown out of the HPC community—tools and libraries such as the Message Passing Interface, a communication protocol used for running large parallel applications.

Merging Lanes

“From a hardware and infrastructure perspective, if you’re good at doing HPC infrastructure for science, you’re probably good at doing AI infrastructure for the cloud. The distinct lanes are merging,” Stanzione said.

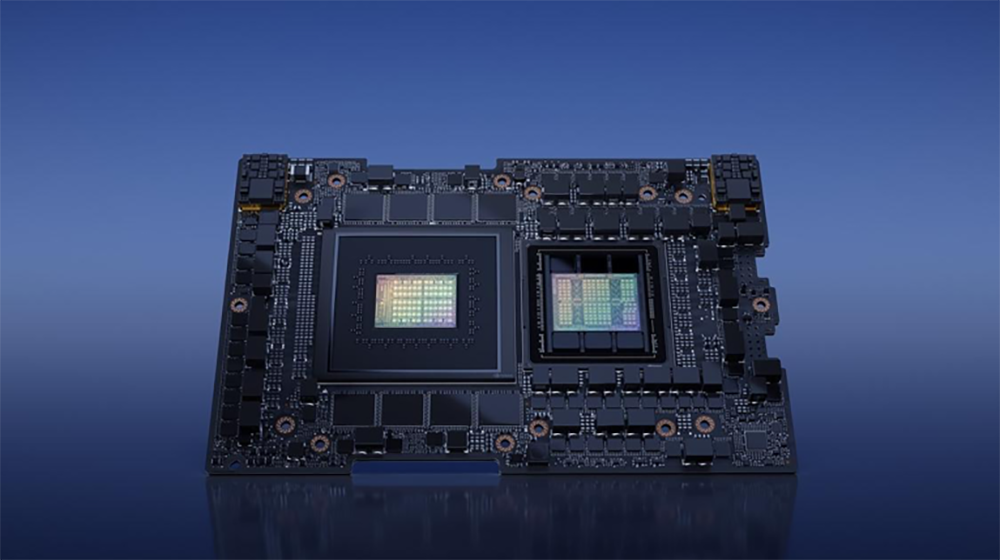

The main difference between the cyberinfrastructure needs of AI and HPC lies in the processors used to complete each workload. AI systems are powered almost exclusively by graphics processing units (GPUs), which excel at low-precision matrix operations. HPC systems use a mix of central processing units (CPUs) and GPUs, because scientific workloads often need much higher precision for exacting numerical accuracy and range.

Most scientific code has been written for higher-precision HPC systems. But scientists are increasingly using mixed precision to take advantage of both worlds, seeking power efficiency and speed from low-precision hardware and leveraging higher-precision hardware where more accuracy is needed. The power savings from mixed precision on GPUs are huge: about five times higher than on CPUs.
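The trade-off can be sketched in a few lines of Python. The example below is an illustration only, not how any TACC system or GPU library implements mixed precision. It uses the standard library's `struct` module to round numbers to 16-bit half precision, then compares a dot product computed entirely in half precision with one that keeps half-precision inputs but accumulates the running sum in 64-bit. The vector length and values are arbitrary choices for the demonstration.

```python
import struct

def to_half(x):
    """Round a 64-bit Python float to the nearest 16-bit half-precision value."""
    return struct.unpack("e", struct.pack("e", x))[0]

def dot_low(a, b):
    """Inputs, products, and the running sum are all rounded to half precision."""
    total = 0.0
    for x, y in zip(a, b):
        product = to_half(to_half(x) * to_half(y))
        total = to_half(total + product)
    return total

def dot_mixed(a, b):
    """Half-precision inputs, but the running sum is kept in 64-bit."""
    total = 0.0
    for x, y in zip(a, b):
        total += to_half(x) * to_half(y)
    return total

def dot_high(a, b):
    """Reference result computed entirely in 64-bit."""
    return sum(x * y for x, y in zip(a, b))

# Arbitrary test vectors (assumption for illustration).
n = 4096
a = [0.1] * n
b = [0.1] * n

exact = dot_high(a, b)
low = dot_low(a, b)
mixed = dot_mixed(a, b)

print("64-bit reference:      ", exact)
print("all half precision:    ", low, "error", abs(low - exact))
print("half in, 64-bit accum.:", mixed, "error", abs(mixed - exact))
```

In the all-half-precision version, the running sum eventually grows so large that adding one more small product no longer changes it, so the result drifts far from the reference. Keeping only the accumulation in higher precision recovers most of the accuracy while the bulk of the data stays in the compact format.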

Big Step into AI

TACC has adapted and launched three systems with AI-capable hardware that support mixed precision computing.

Launched in 2021, TACC’s Lonestar6 system features NVIDIA Ampere A100 GPUs to support machine learning workflows and other GPU-enabled applications along with traditional CPUs for higher-precision workloads.

Launched in 2023, Stampede3 is the latest iteration of the nation’s workhorse in the NSF ACCESS program. In addition to traditional CPUs, it features the Ponte Vecchio Intel Data Center GPU Max 1550. A July 2024 NSF supplement added 24 NVIDIA GH200s (the Grace Hopper “Superchip”) to beef up Stampede3’s GPU hardware.

Launched in 2024, Vista is TACC’s first AI-focused supercomputer for the open science community. Most of Vista’s compute nodes use the NVIDIA GH200 Grace Hopper Superchip, which tightly couples an Arm-based NVIDIA Grace CPU with a Hopper GPU.

“Vista will ramp up our capabilities to support future national research resources in AI and will serve as our gateway to the U.S. National Science Foundation Leadership-Class Computing Facility (NSF LCCF) system, Horizon,” Stanzione said.

National Artificial Intelligence Research Resource (NAIRR)

The NSF chose TACC to participate in the National Artificial Intelligence Research Resource (NAIRR) pilot, envisioned as a widely accessible national cyberinfrastructure that will advance and accelerate the AI R&D environment and fuel AI discovery and innovation.

“TACC is home to the Frontera, Lonestar6, and Stampede3 systems, which are providing initial resources to the NAIRR pilot,” said Katie Antypas, director of the NSF Office of Advanced Cyberinfrastructure.

Building on NAIRR, which stems from a White House executive order, the Trillion Parameter Consortium has launched as a global consortium of scientists building large-scale, trustworthy, and reliable AI systems for scientific discovery. TACC is a founding partner.

“We’re using this consortium to grow a community around the idea of using the world’s fastest supercomputers combined with the large amount of scientific data to make progress on creating large language models for science and engineering,” said Rick Stevens, associate laboratory director of Computing, Environment and Life Sciences at Argonne National Lab.

Stevens pointed to the use of AI in identifying knowledge gaps in the overwhelming number of scientific publications. LLMs can help comb through studies, target key information about a gene, such as how it is regulated and what research relates to it, and even suggest new experiments.

The Year of AI

At UT Austin, President Jay Hartzell declared 2024 to be the “Year of AI,” an initiative to attract new researchers and spur creative exploration that will shape the future of the field. TACC plays a prominent role by offering Texas researchers supercomputer access through the newly launched Center for Generative AI (GenAI).

“GenAI worked with TACC in building Vista with the goal of creating open models and open datasets for four focus areas: biosciences, health care, computer vision, and natural language processing,” said GenAI Director Alex Dimakis.

“TACC has world-class expertise in setting up and maintaining the hardware and software stack for managing massive-scale clusters. This is a unique strength we have at UT Austin,” he added.

On the Horizon

In July 2024, TACC announced the start of construction for Horizon, the new supercomputer that will be part of the NSF LCCF.

Horizon will provide a 10x performance improvement over the current NSF leadership-class computing system, Frontera. For AI applications, the leap forward will be even larger, with more than 100x improvement over Frontera.

“The LCCF represents a pivotal step forward in our mission to support transformative research across all fields of science and engineering,” said NSF Director Sethuraman Panchanathan. “This facility will provide the computational resources necessary to address some of the most pressing challenges of our time, enabling researchers to push the boundaries of what is possible.”

Expected to begin operations in 2026, Horizon will include a significant investment in specialized accelerators to enable state-of-the-art AI research and more general-purpose processors to support the diverse needs for simulation-based inquiry across all scientific disciplines.

“Back in [2007] when Apple released the iPhone, it revolutionized how everyone interacted,” Stanzione said. “Technology changes the world in ways you don’t expect. We’re going to see a similar phenomenon with AI. It’s an incredibly powerful tool.”

AI in Action

Local Climate

Officials at the 2024 Paris Olympics relied on AI in daily weather forecasts to fine-tune event scheduling for 15 million visitors. Scientists at the UT Jackson School of Geosciences and the Cockrell School of Engineering used TACC’s Lonestar6 and Vista supercomputers to resolve global weather models to a neighborhood scale.

Natural Disasters

Civil engineers are collaborating with AI experts in the NSF-funded Chishiki-AI project to bring ground truth to AI models in natural hazards such as floods, earthquakes, and hurricanes. TACC is a key partner with NHERI DesignSafe in the project to offer workshops and hackathons for developing AI models and scaling them up on Lonestar6, Frontera, and Stampede3.

Urban Planning

“Convolutional neural networks don’t always identify traffic signs in low-income neighborhoods where there’s more graffiti or shadows from high-rise buildings, which could have impact in terms of rich versus poor neighborhoods,” said Krishna Kumar, principal investigator of Chishiki-AI and a UT Austin assistant professor in the Cockrell School of Engineering.

Protein Folding

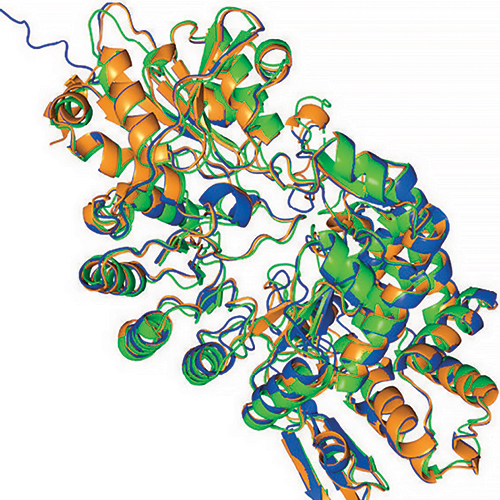

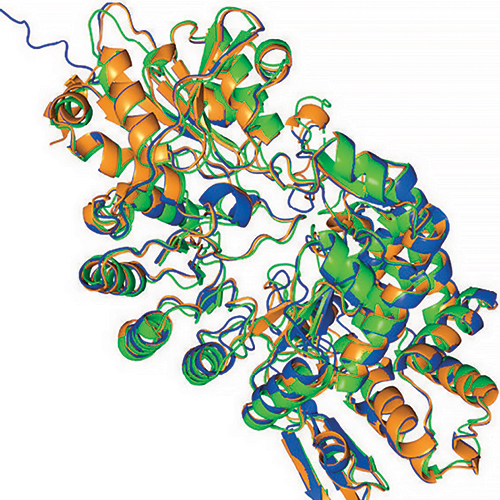

OpenFold, an open-source software tool used to predict protein structures, was developed using TACC’s Frontera and Lonestar6. One of the first applications of OpenFold came from Meta, which recently released an atlas of more than 600 million proteins from microbes that had not yet been characterized.

“They used OpenFold to integrate a protein language model similar to ChatGPT, but where the language is the amino acids that make up proteins,” said Nazim Bouatta, a developer of OpenFold and senior research fellow at Harvard Medical School.

“We used TACC resources to deploy machine learning and AI at the scales we needed,” Bouatta said. “Supercomputers are the microscope of the modern era for biology and drug discovery.”
