Feature Stories

Aaron Dubrow

Related Articles

Deep Learning in Deep Space

AI, supercomputing, and big data power new space discoveries

Throughout history, astronomers have interpreted the cosmos with their eyes, minds, mathematics, and the technologies available to them. Today, that means massive telescopes monitoring the evolution of an exploding star; detectors noting the passing of invisible gravitational waves; deep space satellites mapping distant galaxies; and rovers exploring the surface of Mars.

Advances in observational technologies have carried with them, like dust trailing a comet, a stream of data from outer space — datasets that exceed the capabilities of our minds to examine and interpret.

In recent years, artificial intelligence, or AI, in the form of machine and deep learning has turned the data deluge to our advantage — using digital data to train ‘smart’ systems that can glean insights, find meaningful signals, and improve our decision-making.

Because of the diverse nature of astronomical data, it took a few years for the AI revolution to seep into astronomy and astrophysics. But today, pioneering researchers are finding ways to use machine and deep learning to expand the limits of what’s possible when it comes to studying, interpreting, and even exploring outer space.

Just as astronomers have begun using AI for science, advanced computing centers like TACC have adapted their computing resources to enable this type of research. A handful of projects using TACC systems show the potential of applying AI to astrophysics and space science.

From Data Overload to Discovery

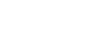

In June 2020, astrophysicists from Caltech announced the discovery of Nyx, a new collection of 250 stars that were born elsewhere and merged with the Milky Way eons ago. “These results strongly favour the interpretation that Nyx is the remnant of a disrupted dwarf galaxy,” the authors wrote in Nature Astronomy.

"Galaxies form by swallowing other galaxies," said Lina Necib, a post-doctoral candidate at Caltech and lead author on the study. "We've assumed that the Milky Way had a quiet merger history, and for a while it was concerning how quiet it was because our simulations show a lot of mergers. Now, we understand it wasn't as quiet as it seemed.”

The discovery of Nyx, and other recent evidence of galactic mergers, suggests astronomers may have been missing an important part of the Milky Way’s evolution.

“It's very powerful to have all these tools, data, and simulations,” Necib said. “All of them have to be used at once to disentangle this problem. We're at the beginning stages of being able to really understand the formation of the Milky way."

The discovery leveraged some of the largest computer models of galaxies ever attempted, known as the FIRE (Feedback In Realistic Environments) simulations, which were enabled in part by TACC supercomputers. Starting from the virtual equivalent of the beginning of time, the simulations produce galaxies that look and behave much like our own.

The researchers used these simulations to train a deep learning model that can differentiate stars born in a galaxy from those that migrated there. They then turned their AI on data from the Gaia space observatory, launched in 2013 by the European Space Agency, to create a three-dimensional map of one billion stars in the Milky Way.

The model identified several known features in the Milky Way including the Helmi stream — another known dwarf galaxy that merged with the Milky Way and was discovered in 1999. It then identified a previously unknown structure: a specific cluster of 250 stars, rotating with the Milky Way's disk, but moving toward the center of the galaxy more rapidly than the other stars in the disk.

Necib named the stream Nyx, the Greek goddess of the night.

“This particular structure is very interesting because it would have been difficult to see without machine learning,” she said. “Everything about this project is computationally very intensive and would not be possible without large-scale computing.”

The Gaia space observatory’s next data release in 2021 will contain additional information about 100 million stars in the catalogue, making it likely that more discoveries of clusters born outside the galaxy will be made.

"When the Gaia mission started, astronomers knew it was one of the largest datasets that they were going to get, with lots to be excited about," Necib said. "But we needed to evolve our techniques to adapt to the dataset. If we didn't change or update our methods, we'd be missing out on physics that are in our dataset.”

Expanding the Limits of the Possible

NASA’s Mars Rovers have been one of the great scientific and space successes of the past two decades. Four generations of rovers have traversed the red planet gathering scientific data, sending back evocative photographs, and surviving incredibly harsh conditions — all using on-board computers less powerful than an iPhone 1.

While a major achievement, these missions have only scratched the surface (literally and figuratively) of the planet and its geology, geography, and atmosphere. This is partly due to the speed of the rovers themselves, and partly to the amount of down time spent waiting for decisions to be made back on Earth and communicated back.

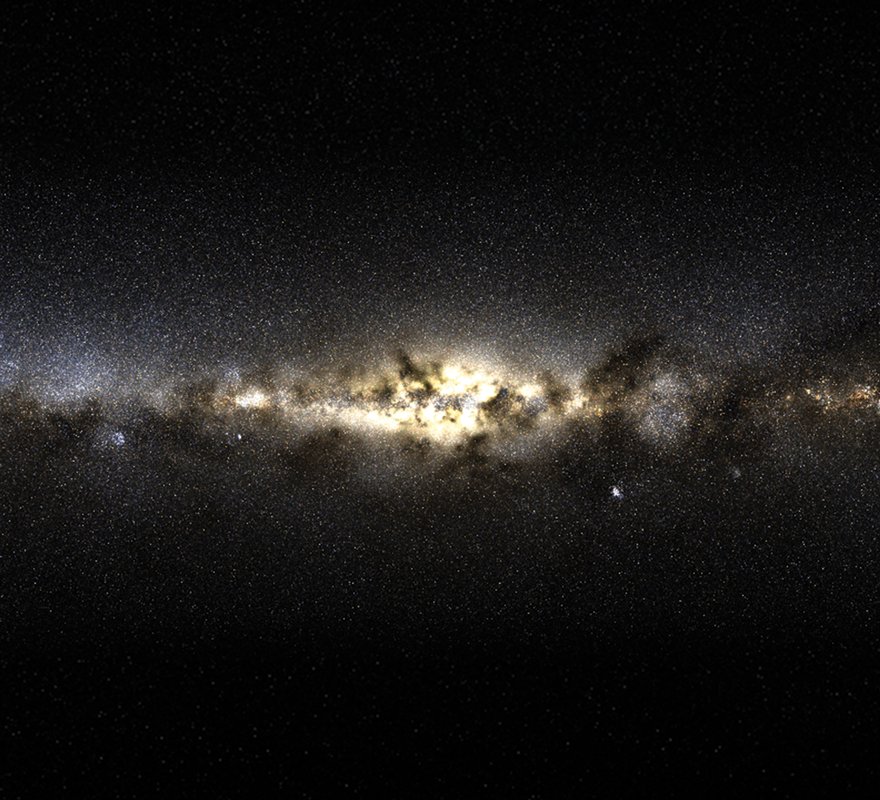

Researchers from NASA’s Jet Propulsion Laboratory (JPL) are working to design future rovers with more autonomy in order to improve the mission’s science gathering abilities. They have been testing a new paradigm that leverages deep learning, trained on high performance computing systems, to allow the rovers to make many decisions without having to phone home: saving power and enabling more science.

“Terrestrial high performance computing has enabled incredible breakthroughs in autonomous vehicle navigation, machine learning, and data analysis for Earth-based applications,” the team wrote in a paper presented at the IEEE Aerospace Conference in March 2020. “The main roadblock to a Mars exploration rollout of such advances is that the best computers are on Earth, while the most valuable data is located on Mars.”

The Machine learning-based Analytics for Autonomous Rover Systems (MAARS) program — which started three years ago and concludes this year — encompasses a range of areas where artificial intelligence is being applied to rovers. The project was a finalist for the 2020 NASA Software of the Year Award.

Training deep learning models on the Maverick2 supercomputer at TACC, as well as on Amazon Web Services and JPL clusters, the team developed two novel capabilities for future Mars rovers: Energy-Optimal Autonomous Navigation and Drive-By Science.

“For rovers, energy is very important,” said Masahiro (Hiro) Ono, group lead of the Robotic Surface Mobility Group at JPL. “There’s no paved highway on Mars. The drivability varies substantially based on the terrain — for instance beach versus bedrock. Coming up with a path with all of these constraints is complicated.”

Future rovers will carry more powerful computer chips, but a new paradigm is also required to plan energy-efficient routes.

“We use a supercomputer on the ground, where we have ‘infinite’ computational resources like those at TACC, to develop a plan where a policy is: if X, then do this; if Y, then do that,” Ono explained. “We’ll basically make a huge to-do list and send gigabytes of data to the rover, compressing it in huge tables. Then we’ll use the increased power of the rover to decompress the policy and execute it.”

The ability to travel much farther will be a necessity for future Mars rovers. An example is the Sample Fetch Rover, proposed to be developed by the European Space Agency and launched in the late 2020s. Its main task will be to retrieve samples dug up by the Mars 2020 rover.

“Those rovers in a period of years would have to drive 10 times further than previous rovers to collect all the samples and get them to a rendezvous site,” said Chris Mattmann, division manager for the Artificial Intelligence, Analytics and Innovative Development Organization at JPL. “We’ll need to be smarter about the way we drive and use energy.”

Another paradigm shift involves the way rovers collect information about Mars, transmit it to Earth, and make scientific discoveries.

Current Mars missions use tens of images each Martian day to decide what to do the next day, according to Mattmann. “But what if in the future we could use one million image captions instead? That's the core tenet of Drive-By Science. If the rover can return text labels and captions that were scientifically validated, our mission team would have a lot more to go on.”

"The main roadblock to a Mars exploration rollout of [autonomous navigation] is that the best computers are on Earth, while the most valuable data is located on Mars."

Mattmann and the team adapted Google’s Show and Tell software — a neural image caption generator first launched in 2014 — for the rover missions. The algorithm takes in images and spits out human-readable captions. These include basic but critical information like how many rocks are nearby and how far away they are, and properties like the vein structure in outcrops near bedrock — “the types of science knowledge that we currently use images for to decide what’s interesting,” Mattmann said. He calls this, “Interplanetary Google Search.”

Over the past few years, planetary geologists have annotated and curated Mars-specific images. Using Maverick2, the team trained, validated, and optimized the model based on 6,700 labels created by those experts.

“We can use the one million captions to find 100 more important things,” Mattmann said. “Using search and information retrieval capabilities, we can prioritize targets. Humans are still in the loop, but they're getting much more information and are able to search it a lot faster.”

Timely Responses to Signals in the Sky

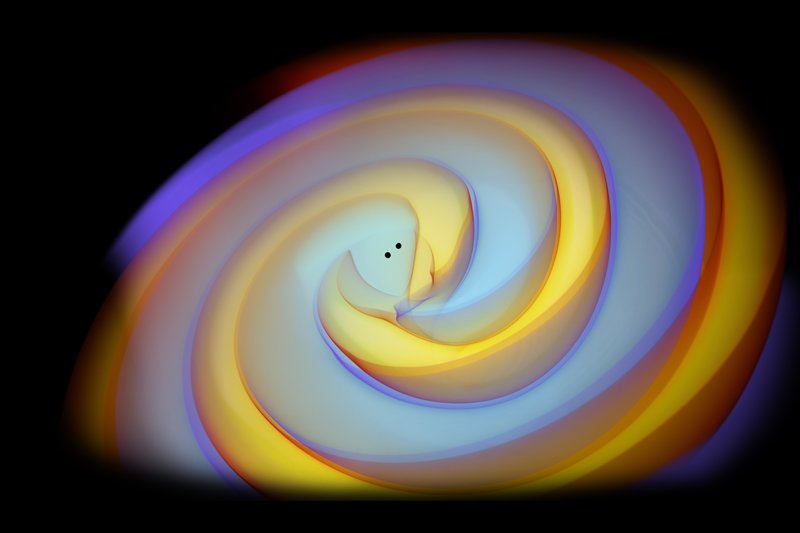

The first detection of gravitational waves from LIGO, the Laser Interferometer Gravitational-Wave Observatory, in 2015 opened a new window to the cosmos. LIGO has captured dozens more signals in recent years. But gravitational wave data, by itself, only reveals so much about the behavior of black holes, neutron stars, and quasars.

Ideally, other forms of detection would help bring even more meaning to these signals. That’s the goal of multi-messenger astrophysics — an approach that combines observations of light, gravitational waves, and particles to understand some of the most extreme events in the Universe.

The observation of gravitational waves and light from the collision of two neutron stars in 2017, for instance, allowed scientists to measure the expansion of the universe and confirmed the association between neutron-star mergers and gamma-ray bursts. More insights are expected from this type of merged science.

Multi-messenger astrophysics is one of NSF’s “10 Big Ideas” for science. In 2019, the agency awarded $2.8 million to a collaboration among nine research centers, including TACC, to develop the concept of a Scalable Cyberinfrastructure Institute for Multi-Messenger Astrophysics, or SCIMMA.

"Multi-messenger astrophysics is a data-intensive science in its infancy that is already transforming our understanding of the Universe," said Patrick Brady, a physics professor at the University of Wisconsin-Madison and one of the project leads. "The promise of multi-messenger astrophysics, however, can be realized only if sufficient cyberinfrastructure is available to rapidly handle, combine, and analyze the very large-scale distributed data from all types of astronomical measurements.”

TACC’s role in the project is to develop machine learning-based detection and identification algorithms for important astronomical events.

"With the volumes of data associated with multi-messenger astronomy and the timescales needed to follow-up important events, new computational methods will be needed to help quickly detect these key astronomical events, coordinate observations and aggregate information for researchers," said Niall Gaffney, TACC director of Data Intensive Computing and a contributor to SCIMMA.

Designing and training deep learning algorithms to detect important astronomical observations is time-consuming and compute-intensive, requiring supercomputers like those at TACC. But once a neural network is fully trained, it can process astronomical data in real-time, identifying and categorizing signals in seconds. The group believes it may even be possible to use several neural network models simultaneously to analyze multi-messenger astrophysical data.

“This approach will vet new discoveries and shed light on the resilience of different neural network architectures in realistic detection scenarios,” said Zhang Zhao, a TACC researcher and contributor to the project.

In the future, an army of robotic observatories will work in concert when a gravitational wave is detected, training their collective sensors on the section of the sky where the event occurred within seconds of a signal. Rovers will cover greater distances on the surface of Mars with more autonomy, enabling more science. And new features of the Milky Way will be discovered through the use of deep learning models, changing our understanding of our cosmic neighborhood.

“Astrophysics and space science are two fields that have pushed the boundaries of data science for centuries,” said Gaffney. “Using a wealth of data, we learn more about the universe and foster new ways data science can be improved in low-risk environments. From these lessons, we can apply new algorithms closer to home and better classify, navigate, and detect events in the world around us.”

MD Anderson Cancer Center, Oden Institute for Computational Engineering and Sciences, and TACC launch a program to understand and defeat cancer using computational science.

NSF and Dell Giving partner up to expand Frontera to support rapid response to emergencies like pandemics and large-scale disasters.

TACC begins supporting non-classified researchers from the Department of Defense to prototype a software system that lets DoD researchers run simulations offsite at TACC.

TACC provides data and computing for researchers investigating chronic pain and the subsequent dependence on opioids.

TACC deploys one of the four regional subsystems of Jetstream2, a new cloud computing platform for U.S. researchers and students, funded by NSF and led by Indiana University.

Chameleon, a cloud computing testbed for computer scientists co-located at TACC and the University of Chicago, receives funding to operate for four more years with support from NSF.

UT Austin, TACC, and collaborators across the world explore the brain and its neural connections at the highest level of detail to date with support from the BRAIN Initiative.

NSF renews the web-based platform for data sharing, natural hazards simulations, and disaster responses coordination for another five years.

The Oden Institute and TACC secure a major research grant from the Department of Energy to develop predictive computational simulators for an inductively coupled plasma torch.